Mirror of https://github.com/hibiken/asynq.git, synced 2025-10-20 21:26:14 +08:00

Compare commits

53 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | a19909f5f4 | | |
| | cea5110d15 | | |
| | 9b63e23274 | | |
| | de25201d9f | | |
| | ec560afb01 | | |
| | d4006894ad | | |
| | 59927509d8 | | |
| | 8211167de2 | | |
| | d7169cd445 | | |
| | dfae8638e1 | | |
| | b9943de2ab | | |
| | 871474f220 | | |
| | 87dc392c7f | | |
| | dabcb120d5 | | |
| | bc2f1986d7 | | |
| | b8cb579407 | | |
| | bca624792c | | |
| | d865d89900 | | |
| | 852af7abd1 | | |
| | 5490d2c625 | | |
| | ebd7a32c0f | | |
| | 55d0610a03 | | |
| | ab8a4f5b1e | | |
| | d7ceb0c090 | | |
| | 8bd70c6f84 | | |
| | 10ab4e3745 | | |
| | 349f4c50fb | | |
| | dff2e3a336 | | |
| | 65040af7b5 | | |
| | 053fe2d1ee | | |
| | 25832e5e95 | | |
| | aa26f3819e | | |
| | d94614bb9b | | |
| | ce46b07652 | | |
| | 2d0170541c | | |
| | c1f08106da | | |
| | 74cf804197 | | |
| | 8dfabfccb3 | | |
| | 5f20edcbd1 | | |
| | 1ddb2f7bce | | |
| | 82d18e3d91 | | |
| | 43cb4ddf19 | | |
| | ddfc6747a1 | | |
| | 970cb7a606 | | |
| | 157e97e72e | | |
| | 22e6c9d297 | | |
| | 99a6750656 | | |
| | e7c1c3ad6f | | |
| | c9183374c5 | | |
| | 6e7106c8f2 | | |
| | 9f2c321e98 | | |
| | e2b61c9056 | | |
| | 531d1ef089 | | |

13

.github/workflows/build.yml

vendored


@@ -22,12 +22,21 @@ jobs:
 with:
 go-version: ${{ matrix.go-version }}

-- name: Build
+- name: Build core module
 run: go build -v ./...

-- name: Test
+- name: Build x module
+run: cd x && go build -v ./... && cd ..
+
+- name: Build tools module
+run: cd tools && go build -v ./... && cd ..
+
+- name: Test core module
 run: go test -race -v -coverprofile=coverage.txt -covermode=atomic ./...

+- name: Test x module
+run: cd x && go test -race -v ./... && cd ..
+
 - name: Benchmark Test
 run: go test -run=^$ -bench=. -loglevel=debug ./...


4

.gitignore

vendored


@@ -1,3 +1,4 @@
+vendor
 # Binaries for programs and plugins
 *.exe
 *.exe~
@@ -14,8 +15,9 @@
 # Ignore examples for now
 /examples

-# Ignore command binary
+# Ignore tool binaries
 /tools/asynq/asynq
+/tools/metrics_exporter/metrics_exporter

 # Ignore asynq config file
 .asynq.*


38

CHANGELOG.md


@@ -7,6 +7,44 @@ and this project adheres to [Semantic Versioning](https://semver.org/spec/v2.0.0

 ## [Unreleased]

+## [0.22.0] - 2022-02-19
+
+### Added
+
+- `BaseContext` is introduced in `Config` to specify a callback hook that provides a base `context` from which the `Handler` `context` is derived.
+- `IsOrphaned` field is added to `TaskInfo` to describe a task left in active state with no worker processing it.
+
+### Changed
+
+- `Server` now recovers tasks with an expired lease. Recovered tasks are retried/archived with `ErrLeaseExpired` error.
+
+## [0.21.0] - 2022-01-22
+
+### Added
+
+- `PeriodicTaskManager` is added. Prefer using this over `Scheduler` as it has better support for dynamic periodic tasks.
+- The `asynq stats` command now supports a `--json` option, making its output a JSON object.
+- Introduced new configuration for `DelayedTaskCheckInterval`. See [godoc](https://godoc.org/github.com/hibiken/asynq) for more details.
+
+## [0.20.0] - 2021-12-19
+
+### Added
+
+- Package `x/metrics` is added.
+- Tool `tools/metrics_exporter` binary is added.
+- `ProcessedTotal` and `FailedTotal` fields were added to `QueueInfo` struct.
+
+## [0.19.1] - 2021-12-12
+
+### Added
+
+- `Latency` field is added to `QueueInfo`.
+- `EnqueueContext` method is added to `Client`.
+
+### Fixed
+
+- Fixed an error when a user passes a duration less than 1s to the `Unique` option.
+
 ## [0.19.0] - 2021-11-06

 ### Changed

128

CODE_OF_CONDUCT.md

Normal file


@@ -0,0 +1,128 @@

|

|||||||

|

# Contributor Covenant Code of Conduct

|

||||||

|

|

||||||

|

## Our Pledge

|

||||||

|

|

||||||

|

We as members, contributors, and leaders pledge to make participation in our

|

||||||

|

community a harassment-free experience for everyone, regardless of age, body

|

||||||

|

size, visible or invisible disability, ethnicity, sex characteristics, gender

|

||||||

|

identity and expression, level of experience, education, socio-economic status,

|

||||||

|

nationality, personal appearance, race, religion, or sexual identity

|

||||||

|

and orientation.

|

||||||

|

|

||||||

|

We pledge to act and interact in ways that contribute to an open, welcoming,

|

||||||

|

diverse, inclusive, and healthy community.

|

||||||

|

|

||||||

|

## Our Standards

|

||||||

|

|

||||||

|

Examples of behavior that contributes to a positive environment for our

|

||||||

|

community include:

|

||||||

|

|

||||||

|

* Demonstrating empathy and kindness toward other people

|

||||||

|

* Being respectful of differing opinions, viewpoints, and experiences

|

||||||

|

* Giving and gracefully accepting constructive feedback

|

||||||

|

* Accepting responsibility and apologizing to those affected by our mistakes,

|

||||||

|

and learning from the experience

|

||||||

|

* Focusing on what is best not just for us as individuals, but for the

|

||||||

|

overall community

|

||||||

|

|

||||||

|

Examples of unacceptable behavior include:

|

||||||

|

|

||||||

|

* The use of sexualized language or imagery, and sexual attention or

|

||||||

|

advances of any kind

|

||||||

|

* Trolling, insulting or derogatory comments, and personal or political attacks

|

||||||

|

* Public or private harassment

|

||||||

|

* Publishing others' private information, such as a physical or email

|

||||||

|

address, without their explicit permission

|

||||||

|

* Other conduct which could reasonably be considered inappropriate in a

|

||||||

|

professional setting

|

||||||

|

|

||||||

|

## Enforcement Responsibilities

|

||||||

|

|

||||||

|

Community leaders are responsible for clarifying and enforcing our standards of

|

||||||

|

acceptable behavior and will take appropriate and fair corrective action in

|

||||||

|

response to any behavior that they deem inappropriate, threatening, offensive,

|

||||||

|

or harmful.

|

||||||

|

|

||||||

|

Community leaders have the right and responsibility to remove, edit, or reject

|

||||||

|

comments, commits, code, wiki edits, issues, and other contributions that are

|

||||||

|

not aligned to this Code of Conduct, and will communicate reasons for moderation

|

||||||

|

decisions when appropriate.

|

||||||

|

|

||||||

|

## Scope

|

||||||

|

|

||||||

|

This Code of Conduct applies within all community spaces, and also applies when

|

||||||

|

an individual is officially representing the community in public spaces.

|

||||||

|

Examples of representing our community include using an official e-mail address,

|

||||||

|

posting via an official social media account, or acting as an appointed

|

||||||

|

representative at an online or offline event.

|

||||||

|

|

||||||

|

## Enforcement

|

||||||

|

|

||||||

|

Instances of abusive, harassing, or otherwise unacceptable behavior may be

|

||||||

|

reported to the community leaders responsible for enforcement at

|

||||||

|

ken.hibino7@gmail.com.

|

||||||

|

All complaints will be reviewed and investigated promptly and fairly.

|

||||||

|

|

||||||

|

All community leaders are obligated to respect the privacy and security of the

|

||||||

|

reporter of any incident.

|

||||||

|

|

||||||

|

## Enforcement Guidelines

|

||||||

|

|

||||||

|

Community leaders will follow these Community Impact Guidelines in determining

|

||||||

|

the consequences for any action they deem in violation of this Code of Conduct:

|

||||||

|

|

||||||

|

### 1. Correction

|

||||||

|

|

||||||

|

**Community Impact**: Use of inappropriate language or other behavior deemed

|

||||||

|

unprofessional or unwelcome in the community.

|

||||||

|

|

||||||

|

**Consequence**: A private, written warning from community leaders, providing

|

||||||

|

clarity around the nature of the violation and an explanation of why the

|

||||||

|

behavior was inappropriate. A public apology may be requested.

|

||||||

|

|

||||||

|

### 2. Warning

|

||||||

|

|

||||||

|

**Community Impact**: A violation through a single incident or series

|

||||||

|

of actions.

|

||||||

|

|

||||||

|

**Consequence**: A warning with consequences for continued behavior. No

|

||||||

|

interaction with the people involved, including unsolicited interaction with

|

||||||

|

those enforcing the Code of Conduct, for a specified period of time. This

|

||||||

|

includes avoiding interactions in community spaces as well as external channels

|

||||||

|

like social media. Violating these terms may lead to a temporary or

|

||||||

|

permanent ban.

|

||||||

|

|

||||||

|

### 3. Temporary Ban

|

||||||

|

|

||||||

|

**Community Impact**: A serious violation of community standards, including

|

||||||

|

sustained inappropriate behavior.

|

||||||

|

|

||||||

|

**Consequence**: A temporary ban from any sort of interaction or public

|

||||||

|

communication with the community for a specified period of time. No public or

|

||||||

|

private interaction with the people involved, including unsolicited interaction

|

||||||

|

with those enforcing the Code of Conduct, is allowed during this period.

|

||||||

|

Violating these terms may lead to a permanent ban.

|

||||||

|

|

||||||

|

### 4. Permanent Ban

|

||||||

|

|

||||||

|

**Community Impact**: Demonstrating a pattern of violation of community

|

||||||

|

standards, including sustained inappropriate behavior, harassment of an

|

||||||

|

individual, or aggression toward or disparagement of classes of individuals.

|

||||||

|

|

||||||

|

**Consequence**: A permanent ban from any sort of public interaction within

|

||||||

|

the community.

|

||||||

|

|

||||||

|

## Attribution

|

||||||

|

|

||||||

|

This Code of Conduct is adapted from the [Contributor Covenant][homepage],

|

||||||

|

version 2.0, available at

|

||||||

|

https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

|

||||||

|

|

||||||

|

Community Impact Guidelines were inspired by [Mozilla's code of conduct

|

||||||

|

enforcement ladder](https://github.com/mozilla/diversity).

|

||||||

|

|

||||||

|

[homepage]: https://www.contributor-covenant.org

|

||||||

|

|

||||||

|

For answers to common questions about this code of conduct, see the FAQ at

|

||||||

|

https://www.contributor-covenant.org/faq. Translations are available at

|

||||||

|

https://www.contributor-covenant.org/translations.

|

||||||

@@ -38,6 +38,7 @@ Task queues are used as a mechanism to distribute work across multiple machines.

|

|||||||

- [Periodic Tasks](https://github.com/hibiken/asynq/wiki/Periodic-Tasks)

|

- [Periodic Tasks](https://github.com/hibiken/asynq/wiki/Periodic-Tasks)

|

||||||

- [Support Redis Cluster](https://github.com/hibiken/asynq/wiki/Redis-Cluster) for automatic sharding and high availability

|

- [Support Redis Cluster](https://github.com/hibiken/asynq/wiki/Redis-Cluster) for automatic sharding and high availability

|

||||||

- [Support Redis Sentinels](https://github.com/hibiken/asynq/wiki/Automatic-Failover) for high availability

|

- [Support Redis Sentinels](https://github.com/hibiken/asynq/wiki/Automatic-Failover) for high availability

|

||||||

|

- Integration with [Prometheus](https://prometheus.io/) to collect and visualize queue metrics

|

||||||

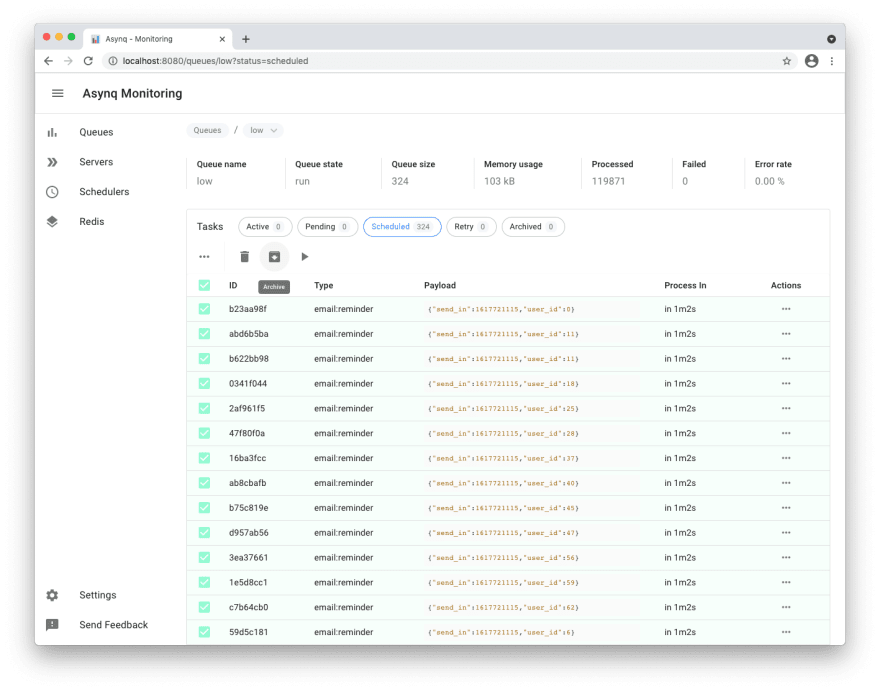

- [Web UI](#web-ui) to inspect and remote-control queues and tasks

|

- [Web UI](#web-ui) to inspect and remote-control queues and tasks

|

||||||

- [CLI](#command-line-tool) to inspect and remote-control queues and tasks

|

- [CLI](#command-line-tool) to inspect and remote-control queues and tasks

|

||||||

|

|

||||||

@@ -65,8 +66,11 @@ Next, write a package that encapsulates task creation and task handling.

|

|||||||

package tasks

|

package tasks

|

||||||

|

|

||||||

import (

|

import (

|

||||||

|

"context"

|

||||||

|

"encoding/json"

|

||||||

"fmt"

|

"fmt"

|

||||||

|

"log"

|

||||||

|

"time"

|

||||||

"github.com/hibiken/asynq"

|

"github.com/hibiken/asynq"

|

||||||

)

|

)

|

||||||

|

|

||||||

@@ -271,6 +275,9 @@ Here's a few screenshots of the Web UI:

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

**Metrics view**

|

||||||

|

<img width="1532" alt="Screen Shot 2021-12-19 at 4 37 19 PM" src="https://user-images.githubusercontent.com/10953044/146777420-cae6c476-bac6-469c-acce-b2f6584e8707.png">

|

||||||

|

|

||||||

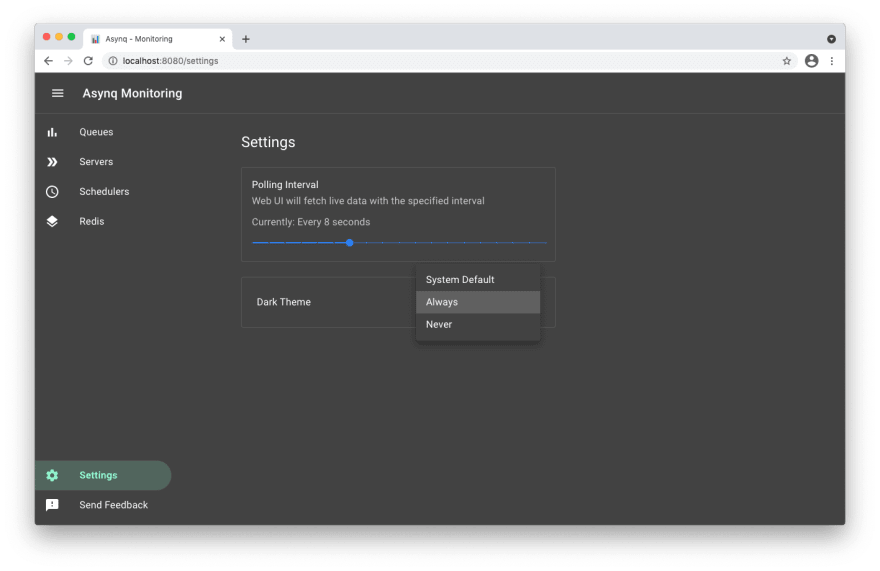

**Settings and adaptive dark mode**

|

**Settings and adaptive dark mode**

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

8

asynq.go


@@ -101,6 +101,14 @@ type TaskInfo struct {
 // zero if not applicable.
 NextProcessAt time.Time

+// IsOrphaned describes whether the task is left in active state with no worker processing it.
+// An orphaned task indicates that the worker has crashed or experienced network failures and was not able to
+// extend its lease on the task.
+//
+// This task will be recovered by running a server against the queue the task is in.
+// This field is only applicable to tasks with TaskStateActive.
+IsOrphaned bool
+
 // Retention is duration of the retention period after the task is successfully processed.
 Retention time.Duration


44

client.go


@@ -5,6 +5,7 @@

|

|||||||

package asynq

|

package asynq

|

||||||

|

|

||||||

import (

|

import (

|

||||||

|

"context"

|

||||||

"fmt"

|

"fmt"

|

||||||

"strings"

|

"strings"

|

||||||

"time"

|

"time"

|

||||||

@@ -143,6 +144,7 @@ func (t deadlineOption) Value() interface{} { return time.Time(t) }

|

|||||||

// Task enqueued with this option is guaranteed to be unique within the given ttl.

|

// Task enqueued with this option is guaranteed to be unique within the given ttl.

|

||||||

// Once the task gets processed successfully or once the TTL has expired, another task with the same uniqueness may be enqueued.

|

// Once the task gets processed successfully or once the TTL has expired, another task with the same uniqueness may be enqueued.

|

||||||

// ErrDuplicateTask error is returned when enqueueing a duplicate task.

|

// ErrDuplicateTask error is returned when enqueueing a duplicate task.

|

||||||

|

// TTL duration must be greater than or equal to 1 second.

|

||||||

//

|

//

|

||||||

// Uniqueness of a task is based on the following properties:

|

// Uniqueness of a task is based on the following properties:

|

||||||

// - Task Type

|

// - Task Type

|

||||||

@@ -246,7 +248,11 @@ func composeOptions(opts ...Option) (option, error) {

|

|||||||

case deadlineOption:

|

case deadlineOption:

|

||||||

res.deadline = time.Time(opt)

|

res.deadline = time.Time(opt)

|

||||||

case uniqueOption:

|

case uniqueOption:

|

||||||

res.uniqueTTL = time.Duration(opt)

|

ttl := time.Duration(opt)

|

||||||

|

if ttl < 1*time.Second {

|

||||||

|

return option{}, errors.New("Unique TTL cannot be less than 1s")

|

||||||

|

}

|

||||||

|

res.uniqueTTL = ttl

|

||||||

case processAtOption:

|

case processAtOption:

|

||||||

res.processAt = time.Time(opt)

|

res.processAt = time.Time(opt)

|

||||||

case processInOption:

|

case processInOption:

|

||||||

@@ -287,7 +293,7 @@ func (c *Client) Close() error {

|

|||||||

return c.rdb.Close()

|

return c.rdb.Close()

|

||||||

}

|

}

|

||||||

|

|

||||||

// Enqueue enqueues the given task to be processed asynchronously.

|

// Enqueue enqueues the given task to a queue.

|

||||||

//

|

//

|

||||||

// Enqueue returns TaskInfo and nil error if the task is enqueued successfully, otherwise returns a non-nil error.

|

// Enqueue returns TaskInfo and nil error if the task is enqueued successfully, otherwise returns a non-nil error.

|

||||||

//

|

//

|

||||||

@@ -297,7 +303,25 @@ func (c *Client) Close() error {

|

|||||||

// By default, max retry is set to 25 and timeout is set to 30 minutes.

|

// By default, max retry is set to 25 and timeout is set to 30 minutes.

|

||||||

//

|

//

|

||||||

// If no ProcessAt or ProcessIn options are provided, the task will be pending immediately.

|

// If no ProcessAt or ProcessIn options are provided, the task will be pending immediately.

|

||||||

|

//

|

||||||

|

// Enqueue uses context.Background internally; to specify the context, use EnqueueContext.

|

||||||

func (c *Client) Enqueue(task *Task, opts ...Option) (*TaskInfo, error) {

|

func (c *Client) Enqueue(task *Task, opts ...Option) (*TaskInfo, error) {

|

||||||

|

return c.EnqueueContext(context.Background(), task, opts...)

|

||||||

|

}

|

||||||

|

|

||||||

|

// EnqueueContext enqueues the given task to a queue.

|

||||||

|

//

|

||||||

|

// EnqueueContext returns TaskInfo and nil error if the task is enqueued successfully, otherwise returns a non-nil error.

|

||||||

|

//

|

||||||

|

// The argument opts specifies the behavior of task processing.

|

||||||

|

// If there are conflicting Option values the last one overrides others.

|

||||||

|

// Any options provided to NewTask can be overridden by options passed to Enqueue.

|

||||||

|

// By default, max retry is set to 25 and timeout is set to 30 minutes.

|

||||||

|

//

|

||||||

|

// If no ProcessAt or ProcessIn options are provided, the task will be pending immediately.

|

||||||

|

//

|

||||||

|

// The first argument context applies to the enqueue operation. To specify task timeout and deadline, use Timeout and Deadline option instead.

|

||||||

|

func (c *Client) EnqueueContext(ctx context.Context, task *Task, opts ...Option) (*TaskInfo, error) {

|

||||||

if strings.TrimSpace(task.Type()) == "" {

|

if strings.TrimSpace(task.Type()) == "" {

|

||||||

return nil, fmt.Errorf("task typename cannot be empty")

|

return nil, fmt.Errorf("task typename cannot be empty")

|

||||||

}

|

}

|

||||||

@@ -338,10 +362,10 @@ func (c *Client) Enqueue(task *Task, opts ...Option) (*TaskInfo, error) {

|

|||||||

var state base.TaskState

|

var state base.TaskState

|

||||||

if opt.processAt.Before(now) || opt.processAt.Equal(now) {

|

if opt.processAt.Before(now) || opt.processAt.Equal(now) {

|

||||||

opt.processAt = now

|

opt.processAt = now

|

||||||

err = c.enqueue(msg, opt.uniqueTTL)

|

err = c.enqueue(ctx, msg, opt.uniqueTTL)

|

||||||

state = base.TaskStatePending

|

state = base.TaskStatePending

|

||||||

} else {

|

} else {

|

||||||

err = c.schedule(msg, opt.processAt, opt.uniqueTTL)

|

err = c.schedule(ctx, msg, opt.processAt, opt.uniqueTTL)

|

||||||

state = base.TaskStateScheduled

|

state = base.TaskStateScheduled

|

||||||

}

|

}

|

||||||

switch {

|

switch {

|

||||||

@@ -355,17 +379,17 @@ func (c *Client) Enqueue(task *Task, opts ...Option) (*TaskInfo, error) {

|

|||||||

return newTaskInfo(msg, state, opt.processAt, nil), nil

|

return newTaskInfo(msg, state, opt.processAt, nil), nil

|

||||||

}

|

}

|

||||||

|

|

||||||

func (c *Client) enqueue(msg *base.TaskMessage, uniqueTTL time.Duration) error {

|

func (c *Client) enqueue(ctx context.Context, msg *base.TaskMessage, uniqueTTL time.Duration) error {

|

||||||

if uniqueTTL > 0 {

|

if uniqueTTL > 0 {

|

||||||

return c.rdb.EnqueueUnique(msg, uniqueTTL)

|

return c.rdb.EnqueueUnique(ctx, msg, uniqueTTL)

|

||||||

}

|

}

|

||||||

return c.rdb.Enqueue(msg)

|

return c.rdb.Enqueue(ctx, msg)

|

||||||

}

|

}

|

||||||

|

|

||||||

func (c *Client) schedule(msg *base.TaskMessage, t time.Time, uniqueTTL time.Duration) error {

|

func (c *Client) schedule(ctx context.Context, msg *base.TaskMessage, t time.Time, uniqueTTL time.Duration) error {

|

||||||

if uniqueTTL > 0 {

|

if uniqueTTL > 0 {

|

||||||

ttl := t.Add(uniqueTTL).Sub(time.Now())

|

ttl := t.Add(uniqueTTL).Sub(time.Now())

|

||||||

return c.rdb.ScheduleUnique(msg, t, ttl)

|

return c.rdb.ScheduleUnique(ctx, msg, t, ttl)

|

||||||

}

|

}

|

||||||

return c.rdb.Schedule(msg, t)

|

return c.rdb.Schedule(ctx, msg, t)

|

||||||

}

|

}

|

||||||

|

|||||||

@@ -734,6 +734,11 @@ func TestClientEnqueueError(t *testing.T) {

|

|||||||

task: NewTask("foo", nil),

|

task: NewTask("foo", nil),

|

||||||

opts: []Option{TaskID(" ")},

|

opts: []Option{TaskID(" ")},

|

||||||

},

|

},

|

||||||

|

{

|

||||||

|

desc: "With unique option less than 1s",

|

||||||

|

task: NewTask("foo", nil),

|

||||||

|

opts: []Option{Unique(300 * time.Millisecond)},

|

||||||

|

},

|

||||||

}

|

}

|

||||||

|

|

||||||

for _, tc := range tests {

|

for _, tc := range tests {

|

||||||

|

|||||||

@@ -5,6 +5,7 @@

|

|||||||

package asynq_test

|

package asynq_test

|

||||||

|

|

||||||

import (

|

import (

|

||||||

|

"context"

|

||||||

"fmt"

|

"fmt"

|

||||||

"log"

|

"log"

|

||||||

"os"

|

"os"

|

||||||

@@ -113,3 +114,20 @@ func ExampleParseRedisURI() {

|

|||||||

// localhost:6379

|

// localhost:6379

|

||||||

// 10

|

// 10

|

||||||

}

|

}

|

||||||

|

|

||||||

|

func ExampleResultWriter() {

|

||||||

|

// ResultWriter is only accessible in Handler.

|

||||||

|

h := func(ctx context.Context, task *asynq.Task) error {

|

||||||

|

// .. do task processing work

|

||||||

|

|

||||||

|

res := []byte("task result data")

|

||||||

|

n, err := task.ResultWriter().Write(res) // implements io.Writer

|

||||||

|

if err != nil {

|

||||||

|

return fmt.Errorf("failed to write task result: %v", err)

|

||||||

|

}

|

||||||

|

log.Printf(" %d bytes written", n)

|

||||||

|

return nil

|

||||||

|

}

|

||||||

|

|

||||||

|

_ = h

|

||||||

|

}

|

||||||

|

|||||||

@@ -70,6 +70,6 @@ func (f *forwarder) start(wg *sync.WaitGroup) {

 func (f *forwarder) exec() {
 if err := f.broker.ForwardIfReady(f.queues...); err != nil {
-f.logger.Errorf("Could not enqueue scheduled tasks: %v", err)
+f.logger.Errorf("Failed to forward scheduled tasks: %v", err)
 }
 }

2

go.mod


@@ -1,6 +1,6 @@
 module github.com/hibiken/asynq

-go 1.13
+go 1.14

 require (
 github.com/go-redis/redis/v8 v8.11.2

40

heartbeat.go


@@ -12,6 +12,7 @@ import (

|

|||||||

"github.com/google/uuid"

|

"github.com/google/uuid"

|

||||||

"github.com/hibiken/asynq/internal/base"

|

"github.com/hibiken/asynq/internal/base"

|

||||||

"github.com/hibiken/asynq/internal/log"

|

"github.com/hibiken/asynq/internal/log"

|

||||||

|

"github.com/hibiken/asynq/internal/timeutil"

|

||||||

)

|

)

|

||||||

|

|

||||||

// heartbeater is responsible for writing process info to redis periodically to

|

// heartbeater is responsible for writing process info to redis periodically to

|

||||||

@@ -19,6 +20,7 @@ import (

|

|||||||

type heartbeater struct {

|

type heartbeater struct {

|

||||||

logger *log.Logger

|

logger *log.Logger

|

||||||

broker base.Broker

|

broker base.Broker

|

||||||

|

clock timeutil.Clock

|

||||||

|

|

||||||

// channel to communicate back to the long running "heartbeater" goroutine.

|

// channel to communicate back to the long running "heartbeater" goroutine.

|

||||||

done chan struct{}

|

done chan struct{}

|

||||||

@@ -41,7 +43,7 @@ type heartbeater struct {

|

|||||||

workers map[string]*workerInfo

|

workers map[string]*workerInfo

|

||||||

|

|

||||||

// state is shared with other goroutine but is concurrency safe.

|

// state is shared with other goroutine but is concurrency safe.

|

||||||

state *base.ServerState

|

state *serverState

|

||||||

|

|

||||||

// channels to receive updates on active workers.

|

// channels to receive updates on active workers.

|

||||||

starting <-chan *workerInfo

|

starting <-chan *workerInfo

|

||||||

@@ -55,7 +57,7 @@ type heartbeaterParams struct {

|

|||||||

concurrency int

|

concurrency int

|

||||||

queues map[string]int

|

queues map[string]int

|

||||||

strictPriority bool

|

strictPriority bool

|

||||||

state *base.ServerState

|

state *serverState

|

||||||

starting <-chan *workerInfo

|

starting <-chan *workerInfo

|

||||||

finished <-chan *base.TaskMessage

|

finished <-chan *base.TaskMessage

|

||||||

}

|

}

|

||||||

@@ -69,6 +71,7 @@ func newHeartbeater(params heartbeaterParams) *heartbeater {

|

|||||||

return &heartbeater{

|

return &heartbeater{

|

||||||

logger: params.logger,

|

logger: params.logger,

|

||||||

broker: params.broker,

|

broker: params.broker,

|

||||||

|

clock: timeutil.NewRealClock(),

|

||||||

done: make(chan struct{}),

|

done: make(chan struct{}),

|

||||||

interval: params.interval,

|

interval: params.interval,

|

||||||

|

|

||||||

@@ -100,6 +103,8 @@ type workerInfo struct {

|

|||||||

started time.Time

|

started time.Time

|

||||||

// deadline the worker has to finish processing the task by.

|

// deadline the worker has to finish processing the task by.

|

||||||

deadline time.Time

|

deadline time.Time

|

||||||

|

// lease the worker holds for the task.

|

||||||

|

lease *base.Lease

|

||||||

}

|

}

|

||||||

|

|

||||||

func (h *heartbeater) start(wg *sync.WaitGroup) {

|

func (h *heartbeater) start(wg *sync.WaitGroup) {

|

||||||

@@ -107,7 +112,7 @@ func (h *heartbeater) start(wg *sync.WaitGroup) {

|

|||||||

go func() {

|

go func() {

|

||||||

defer wg.Done()

|

defer wg.Done()

|

||||||

|

|

||||||

h.started = time.Now()

|

h.started = h.clock.Now()

|

||||||

|

|

||||||

h.beat()

|

h.beat()

|

||||||

|

|

||||||

@@ -134,7 +139,12 @@ func (h *heartbeater) start(wg *sync.WaitGroup) {

|

|||||||

}()

|

}()

|

||||||

}

|

}

|

||||||

|

|

||||||

|

// beat extends lease for workers and writes server/worker info to redis.

|

||||||

func (h *heartbeater) beat() {

|

func (h *heartbeater) beat() {

|

||||||

|

h.state.mu.Lock()

|

||||||

|

srvStatus := h.state.value.String()

|

||||||

|

h.state.mu.Unlock()

|

||||||

|

|

||||||

info := base.ServerInfo{

|

info := base.ServerInfo{

|

||||||

Host: h.host,

|

Host: h.host,

|

||||||

PID: h.pid,

|

PID: h.pid,

|

||||||

@@ -142,12 +152,13 @@ func (h *heartbeater) beat() {

|

|||||||

Concurrency: h.concurrency,

|

Concurrency: h.concurrency,

|

||||||

Queues: h.queues,

|

Queues: h.queues,

|

||||||

StrictPriority: h.strictPriority,

|

StrictPriority: h.strictPriority,

|

||||||

Status: h.state.String(),

|

Status: srvStatus,

|

||||||

Started: h.started,

|

Started: h.started,

|

||||||

ActiveWorkerCount: len(h.workers),

|

ActiveWorkerCount: len(h.workers),

|

||||||

}

|

}

|

||||||

|

|

||||||

var ws []*base.WorkerInfo

|

var ws []*base.WorkerInfo

|

||||||

|

idsByQueue := make(map[string][]string)

|

||||||

for id, w := range h.workers {

|

for id, w := range h.workers {

|

||||||

ws = append(ws, &base.WorkerInfo{

|

ws = append(ws, &base.WorkerInfo{

|

||||||

Host: h.host,

|

Host: h.host,

|

||||||

@@ -160,11 +171,30 @@ func (h *heartbeater) beat() {

|

|||||||

Started: w.started,

|

Started: w.started,

|

||||||

Deadline: w.deadline,

|

Deadline: w.deadline,

|

||||||

})

|

})

|

||||||

|

// Check lease before adding to the set to make sure not to extend the lease if the lease is already expired.

|

||||||

|

if w.lease.IsValid() {

|

||||||

|

idsByQueue[w.msg.Queue] = append(idsByQueue[w.msg.Queue], id)

|

||||||

|

} else {

|

||||||

|

w.lease.NotifyExpiration() // notify processor if the lease is expired

|

||||||

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

// Note: Set TTL to be long enough so that it won't expire before we write again

|

// Note: Set TTL to be long enough so that it won't expire before we write again

|

||||||

// and short enough to expire quickly once the process is shut down or killed.

|

// and short enough to expire quickly once the process is shut down or killed.

|

||||||

if err := h.broker.WriteServerState(&info, ws, h.interval*2); err != nil {

|

if err := h.broker.WriteServerState(&info, ws, h.interval*2); err != nil {

|

||||||

h.logger.Errorf("could not write server state data: %v", err)

|

h.logger.Errorf("Failed to write server state data: %v", err)

|

||||||

|

}

|

||||||

|

|

||||||

|

for qname, ids := range idsByQueue {

|

||||||

|

expirationTime, err := h.broker.ExtendLease(qname, ids...)

|

||||||

|

if err != nil {

|

||||||

|

h.logger.Errorf("Failed to extend lease for tasks %v: %v", ids, err)

|

||||||

|

continue

|

||||||

|

}

|

||||||

|

for _, id := range ids {

|

||||||

|

if l := h.workers[id].lease; !l.Reset(expirationTime) {

|

||||||

|

h.logger.Warnf("Lease reset failed for %s; lease deadline: %v", id, l.Deadline())

|

||||||

|

}

|

||||||

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|||||||

@@ -5,6 +5,7 @@

|

|||||||

package asynq

|

package asynq

|

||||||

|

|

||||||

import (

|

import (

|

||||||

|

"context"

|

||||||

"sync"

|

"sync"

|

||||||

"testing"

|

"testing"

|

||||||

"time"

|

"time"

|

||||||

@@ -15,21 +16,143 @@ import (

|

|||||||

"github.com/hibiken/asynq/internal/base"

|

"github.com/hibiken/asynq/internal/base"

|

||||||

"github.com/hibiken/asynq/internal/rdb"

|

"github.com/hibiken/asynq/internal/rdb"

|

||||||

"github.com/hibiken/asynq/internal/testbroker"

|

"github.com/hibiken/asynq/internal/testbroker"

|

||||||

|

"github.com/hibiken/asynq/internal/timeutil"

|

||||||

)

|

)

|

||||||

|

|

||||||

|

// Test goes through a few phases.

|

||||||

|

//

|

||||||

|

// Phase1: Simulate Server startup; Simulate starting tasks listed in startedWorkers

|

||||||

|

// Phase2: Simulate finishing tasks listed in finishedTasks

|

||||||

|

// Phase3: Simulate Server shutdown;

|

||||||

func TestHeartbeater(t *testing.T) {

|

func TestHeartbeater(t *testing.T) {

|

||||||

r := setup(t)

|

r := setup(t)

|

||||||

defer r.Close()

|

defer r.Close()

|

||||||

rdbClient := rdb.NewRDB(r)

|

rdbClient := rdb.NewRDB(r)

|

||||||

|

|

||||||

|

now := time.Now()

|

||||||

|

const elapsedTime = 10 * time.Second // simulated time elapsed between phase1 and phase2

|

||||||

|

|

||||||

|

clock := timeutil.NewSimulatedClock(time.Time{}) // time will be set in each test

|

||||||

|

|

||||||

|

t1 := h.NewTaskMessageWithQueue("task1", nil, "default")

|

||||||

|

t2 := h.NewTaskMessageWithQueue("task2", nil, "default")

|

||||||

|

t3 := h.NewTaskMessageWithQueue("task3", nil, "default")

|

||||||

|

t4 := h.NewTaskMessageWithQueue("task4", nil, "custom")

|

||||||

|

t5 := h.NewTaskMessageWithQueue("task5", nil, "custom")

|

||||||

|

t6 := h.NewTaskMessageWithQueue("task6", nil, "default")

|

||||||

|

|

||||||

|

// Note: intentionally set to a time earlier than now.Add(rdb.LeaseDuration) to test that lease extension is working.

|

||||||

|

lease1 := h.NewLeaseWithClock(now.Add(10*time.Second), clock)

|

||||||

|

lease2 := h.NewLeaseWithClock(now.Add(10*time.Second), clock)

|

||||||

|

lease3 := h.NewLeaseWithClock(now.Add(10*time.Second), clock)

|

||||||

|

lease4 := h.NewLeaseWithClock(now.Add(10*time.Second), clock)

|

||||||

|

lease5 := h.NewLeaseWithClock(now.Add(10*time.Second), clock)

|

||||||

|

lease6 := h.NewLeaseWithClock(now.Add(10*time.Second), clock)

|

||||||

|

|

||||||

tests := []struct {

|

tests := []struct {

|

||||||

|

desc string

|

||||||

|

|

||||||

|

// Interval between heartbeats.

|

||||||

interval time.Duration

|

interval time.Duration

|

||||||

|

|

||||||

|

// Server info.

|

||||||

host string

|

host string

|

||||||

pid int

|

pid int

|

||||||

queues map[string]int

|

queues map[string]int

|

||||||

concurrency int

|

concurrency int

|

||||||

|

|

||||||

|

active map[string][]*base.TaskMessage // initial active set state

|

||||||

|

lease map[string][]base.Z // initial lease set state

|

||||||

|

wantLease1 map[string][]base.Z // expected lease set state after starting all startedWorkers

|

||||||

|

wantLease2 map[string][]base.Z // expected lease set state after finishing all finishedTasks

|

||||||

|

startedWorkers []*workerInfo // workerInfo to send via the started channel

|

||||||

|

finishedTasks []*base.TaskMessage // tasks to send via the finished channel

|

||||||

|

|

||||||

|

startTime time.Time // simulated start time

|

||||||

|

elapsedTime time.Duration // simulated time elapsed between starting and finishing processing tasks

|

||||||

}{

|

}{

|

||||||

{2 * time.Second, "localhost", 45678, map[string]int{"default": 1}, 10},

|

{

|

||||||

|

desc: "With single queue",

|

||||||

|

interval: 2 * time.Second,

|

||||||

|

host: "localhost",

|

||||||

|

pid: 45678,

|

||||||

|

queues: map[string]int{"default": 1},

|

||||||

|

concurrency: 10,

|

||||||

|

active: map[string][]*base.TaskMessage{

|

||||||

|

"default": {t1, t2, t3},

|

||||||

|

},

|

||||||

|

lease: map[string][]base.Z{

|

||||||

|

"default": {

|

||||||

|

{Message: t1, Score: now.Add(10 * time.Second).Unix()},

|

||||||

|

{Message: t2, Score: now.Add(10 * time.Second).Unix()},

|

||||||

|

{Message: t3, Score: now.Add(10 * time.Second).Unix()},

|

||||||

|

},

|

||||||

|

},

|

||||||

|

startedWorkers: []*workerInfo{

|

||||||

|

{msg: t1, started: now, deadline: now.Add(2 * time.Minute), lease: lease1},

|

||||||

|

{msg: t2, started: now, deadline: now.Add(2 * time.Minute), lease: lease2},

|

||||||

|

{msg: t3, started: now, deadline: now.Add(2 * time.Minute), lease: lease3},

|

||||||

|

},

|

||||||

|

finishedTasks: []*base.TaskMessage{t1, t2},

|

||||||

|

wantLease1: map[string][]base.Z{

|

||||||

|

"default": {

|

||||||

|

{Message: t1, Score: now.Add(rdb.LeaseDuration).Unix()},

|

||||||

|

{Message: t2, Score: now.Add(rdb.LeaseDuration).Unix()},

|

||||||

|

{Message: t3, Score: now.Add(rdb.LeaseDuration).Unix()},

|

||||||

|

},

|

||||||

|

},

|

||||||

|

wantLease2: map[string][]base.Z{

|

||||||

|

"default": {

|

||||||

|

{Message: t3, Score: now.Add(elapsedTime).Add(rdb.LeaseDuration).Unix()},

|

||||||

|

},

|

||||||

|

},

|

||||||

|

startTime: now,

|

||||||

|

elapsedTime: elapsedTime,

|

||||||

|

},

|

||||||

|

{

|

||||||

|

desc: "With multiple queues",

|

||||||

|

interval: 2 * time.Second,

|

||||||

|

host: "localhost",

|

||||||

|

pid: 45678,

|

||||||

|

queues: map[string]int{"default": 1, "custom": 2},

|

||||||

|

concurrency: 10,

|

||||||

|

active: map[string][]*base.TaskMessage{

|

||||||

|

"default": {t6},

|

||||||

|

"custom": {t4, t5},

|

||||||

|

},

|

||||||

|

lease: map[string][]base.Z{

|

||||||

|

"default": {

|

||||||

|

{Message: t6, Score: now.Add(10 * time.Second).Unix()},

|

||||||

|

},

|

||||||

|

"custom": {

|

||||||

|

{Message: t4, Score: now.Add(10 * time.Second).Unix()},

|

||||||

|

{Message: t5, Score: now.Add(10 * time.Second).Unix()},

|

||||||

|

},

|

||||||

|

},

|

||||||

|

startedWorkers: []*workerInfo{

|

||||||

|

{msg: t6, started: now, deadline: now.Add(2 * time.Minute), lease: lease6},

|

||||||

|

{msg: t4, started: now, deadline: now.Add(2 * time.Minute), lease: lease4},

|

||||||

|

{msg: t5, started: now, deadline: now.Add(2 * time.Minute), lease: lease5},

|

||||||

|

},

|

||||||

|

finishedTasks: []*base.TaskMessage{t6, t5},

|

||||||

|

wantLease1: map[string][]base.Z{

|

||||||

|

"default": {

|

||||||

|

{Message: t6, Score: now.Add(rdb.LeaseDuration).Unix()},

|

||||||

|

},

|

||||||

|

"custom": {

|

||||||

|

{Message: t4, Score: now.Add(rdb.LeaseDuration).Unix()},

|

||||||

|

{Message: t5, Score: now.Add(rdb.LeaseDuration).Unix()},

|

||||||

|

},

|

||||||

|

},

|

||||||

|

wantLease2: map[string][]base.Z{

|

||||||

|

"default": {},

|

||||||

|

"custom": {

|

||||||

|

{Message: t4, Score: now.Add(elapsedTime).Add(rdb.LeaseDuration).Unix()},

|

||||||

|

},

|

||||||

|

},

|

||||||

|

startTime: now,

|

||||||

|

elapsedTime: elapsedTime,

|

||||||

|

},

|

||||||

}

|

}

|

||||||

|

|

||||||

timeCmpOpt := cmpopts.EquateApproxTime(10 * time.Millisecond)

|

timeCmpOpt := cmpopts.EquateApproxTime(10 * time.Millisecond)

|

||||||

@@ -37,8 +160,15 @@ func TestHeartbeater(t *testing.T) {

|

|||||||

ignoreFieldOpt := cmpopts.IgnoreFields(base.ServerInfo{}, "ServerID")

|

ignoreFieldOpt := cmpopts.IgnoreFields(base.ServerInfo{}, "ServerID")

|

||||||

for _, tc := range tests {

|

for _, tc := range tests {

|

||||||

h.FlushDB(t, r)

|

h.FlushDB(t, r)

|

||||||

|

h.SeedAllActiveQueues(t, r, tc.active)

|

||||||

|

h.SeedAllLease(t, r, tc.lease)

|

||||||

|

|

||||||

state := base.NewServerState()

|

clock.SetTime(tc.startTime)

|

||||||

|

rdbClient.SetClock(clock)

|

||||||

|

|

||||||

|

srvState := &serverState{}

|

||||||

|

startingCh := make(chan *workerInfo)

|

||||||

|

finishedCh := make(chan *base.TaskMessage)

|

||||||

hb := newHeartbeater(heartbeaterParams{

|

hb := newHeartbeater(heartbeaterParams{

|

||||||

logger: testLogger,

|

logger: testLogger,

|

||||||

broker: rdbClient,

|

broker: rdbClient,

|

||||||

@@ -46,72 +176,134 @@ func TestHeartbeater(t *testing.T) {

|

|||||||

concurrency: tc.concurrency,

|

concurrency: tc.concurrency,

|

||||||

queues: tc.queues,

|

queues: tc.queues,

|

||||||

strictPriority: false,

|

strictPriority: false,

|

||||||

state: state,

|

state: srvState,

|

||||||

starting: make(chan *workerInfo),

|

starting: startingCh,

|

||||||

finished: make(chan *base.TaskMessage),

|

finished: finishedCh,

|

||||||

})

|

})

|

||||||

|

hb.clock = clock

|

||||||

|

|

||||||

// Change host and pid fields for testing purpose.

|

// Change host and pid fields for testing purpose.

|

||||||

hb.host = tc.host

|

hb.host = tc.host

|

||||||

hb.pid = tc.pid

|

hb.pid = tc.pid

|

||||||

|

|

||||||

state.Set(base.StateActive)

|

//===================

|

||||||

|

// Start Phase1

|

||||||

|

//===================

|

||||||

|

|

||||||

|

srvState.mu.Lock()

|

||||||

|

srvState.value = srvStateActive // simulating Server.Start

|

||||||

|

srvState.mu.Unlock()

|

||||||

|

|

||||||

var wg sync.WaitGroup

|

var wg sync.WaitGroup

|

||||||

hb.start(&wg)

|

hb.start(&wg)

|

||||||

|

|

||||||

want := &base.ServerInfo{

|

// Simulate processor starting to work on tasks.

|

||||||

|

for _, w := range tc.startedWorkers {

|

||||||

|

startingCh <- w

|

||||||

|

}

|

||||||

|

|

||||||

|

// Wait for heartbeater to write to redis

|

||||||

|

time.Sleep(tc.interval * 2)

|

||||||

|

|

||||||

|

ss, err := rdbClient.ListServers()

|

||||||

|

if err != nil {

|

||||||

|

t.Errorf("%s: could not read server info from redis: %v", tc.desc, err)

|

||||||

|

hb.shutdown()

|

||||||

|

continue

|

||||||

|

}

|

||||||

|

|

||||||

|

if len(ss) != 1 {

|

||||||

|

t.Errorf("%s: (*RDB).ListServers returned %d server info, want 1", tc.desc, len(ss))

|

||||||

|

hb.shutdown()

|

||||||

|

continue

|

||||||

|

}

|

||||||

|

|

||||||

|

wantInfo := &base.ServerInfo{

|

||||||

Host: tc.host,

|

Host: tc.host,

|

||||||

PID: tc.pid,

|

PID: tc.pid,

|

||||||

Queues: tc.queues,

|

Queues: tc.queues,

|

||||||

Concurrency: tc.concurrency,

|

Concurrency: tc.concurrency,

|

||||||

Started: time.Now(),

|

Started: now,

|

||||||

Status: "active",

|

Status: "active",

|

||||||

|

ActiveWorkerCount: len(tc.startedWorkers),

|

||||||

}

|

}

|

||||||

|

if diff := cmp.Diff(wantInfo, ss[0], timeCmpOpt, ignoreOpt, ignoreFieldOpt); diff != "" {

|

||||||

// allow for heartbeater to write to redis

|

t.Errorf("%s: redis stored server status %+v, want %+v; (-want, +got)\n%s", tc.desc, ss[0], wantInfo, diff)

|

||||||

time.Sleep(tc.interval)

|

|

||||||

|

|

||||||

ss, err := rdbClient.ListServers()

|

|

||||||

if err != nil {

|

|

||||||

t.Errorf("could not read server info from redis: %v", err)

|

|

||||||

hb.shutdown()

|

hb.shutdown()

|

||||||

continue

|

continue

|

||||||

}

|

}

|

||||||

|

|

||||||

if len(ss) != 1 {

|

for qname, wantLease := range tc.wantLease1 {

|

||||||

t.Errorf("(*RDB).ListServers returned %d process info, want 1", len(ss))

|

gotLease := h.GetLeaseEntries(t, r, qname)

|

||||||

hb.shutdown()

|

if diff := cmp.Diff(wantLease, gotLease, h.SortZSetEntryOpt); diff != "" {

|

||||||

continue

|

t.Errorf("%s: mismatch found in %q: (-want,+got):\n%s", tc.desc, base.LeaseKey(qname), diff)

|

||||||

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

if diff := cmp.Diff(want, ss[0], timeCmpOpt, ignoreOpt, ignoreFieldOpt); diff != "" {

|

for _, w := range tc.startedWorkers {

|

||||||

t.Errorf("redis stored process status %+v, want %+v; (-want, +got)\n%s", ss[0], want, diff)

|

if want := now.Add(rdb.LeaseDuration); w.lease.Deadline() != want {

|

||||||

hb.shutdown()

|

t.Errorf("%s: lease deadline for %v is set to %v, want %v", tc.desc, w.msg, w.lease.Deadline(), want)

|

||||||

continue

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

// status change

|

//===================

|

||||||

state.Set(base.StateClosed)

|

// Start Phase2

|

||||||

|

//===================

|

||||||

|

|

||||||

// allow for heartbeater to write to redis

|

clock.AdvanceTime(tc.elapsedTime)

|

||||||

|

// Simulate processor finished processing tasks.

|

||||||

|

for _, msg := range tc.finishedTasks {

|

||||||

|

if err := rdbClient.Done(context.Background(), msg); err != nil {

|

||||||

|

t.Fatalf("RDB.Done failed: %v", err)

|

||||||

|

}

|

||||||

|

finishedCh <- msg

|

||||||

|

}

|

||||||

|

// Wait for heartbeater to write to redis

|

||||||

time.Sleep(tc.interval * 2)

|

time.Sleep(tc.interval * 2)

|

||||||

|

|

||||||

want.Status = "closed"

|

for qname, wantLease := range tc.wantLease2 {

|

||||||

|

gotLease := h.GetLeaseEntries(t, r, qname)

|

||||||

|

if diff := cmp.Diff(wantLease, gotLease, h.SortZSetEntryOpt); diff != "" {

|

||||||

|

t.Errorf("%s: mismatch found in %q: (-want,+got):\n%s", tc.desc, base.LeaseKey(qname), diff)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

//===================

|

||||||

|

// Start Phase3

|

||||||

|

//===================

|

||||||

|

|

||||||

|

// Server state change; simulating Server.Shutdown

|

||||||

|

srvState.mu.Lock()

|

||||||

|

srvState.value = srvStateClosed

|

||||||

|

srvState.mu.Unlock()

|

||||||

|

|

||||||

|

// Wait for heartbeater to write to redis

|

||||||

|

time.Sleep(tc.interval * 2)

|

||||||

|

|

||||||

|

wantInfo = &base.ServerInfo{

|

||||||

|

Host: tc.host,

|

||||||

|

PID: tc.pid,

|

||||||

|

Queues: tc.queues,

|

||||||

|

Concurrency: tc.concurrency,

|

||||||

|

Started: now,

|

||||||

|

Status: "closed",

|

||||||

|

ActiveWorkerCount: len(tc.startedWorkers) - len(tc.finishedTasks),

|

||||||

|

}

|

||||||

ss, err = rdbClient.ListServers()

|

ss, err = rdbClient.ListServers()

|

||||||

if err != nil {

|

if err != nil {

|

||||||

t.Errorf("could not read process status from redis: %v", err)

|

t.Errorf("%s: could not read server status from redis: %v", tc.desc, err)

|

||||||

hb.shutdown()

|

hb.shutdown()

|

||||||

continue

|

continue

|

||||||

}

|

}

|

||||||

|

|

||||||

if len(ss) != 1 {

|

if len(ss) != 1 {

|

||||||

t.Errorf("(*RDB).ListProcesses returned %d process info, want 1", len(ss))

|

t.Errorf("%s: (*RDB).ListServers returned %d server info, want 1", tc.desc, len(ss))

|

||||||

hb.shutdown()

|

hb.shutdown()

|

||||||

continue

|

continue

|

||||||

}

|

}

|

||||||

|

|

||||||

if diff := cmp.Diff(want, ss[0], timeCmpOpt, ignoreOpt, ignoreFieldOpt); diff != "" {

|

if diff := cmp.Diff(wantInfo, ss[0], timeCmpOpt, ignoreOpt, ignoreFieldOpt); diff != "" {

|

||||||

t.Errorf("redis stored process status %+v, want %+v; (-want, +got)\n%s", ss[0], want, diff)

|

t.Errorf("%s: redis stored process status %+v, want %+v; (-want, +got)\n%s", tc.desc, ss[0], wantInfo, diff)

|

||||||

hb.shutdown()

|

hb.shutdown()

|

||||||

continue

|

continue

|

||||||

}

|

}

|

||||||

@@ -131,8 +323,7 @@ func TestHeartbeaterWithRedisDown(t *testing.T) {

|

|||||||

r := rdb.NewRDB(setup(t))

|

r := rdb.NewRDB(setup(t))

|

||||||

defer r.Close()

|

defer r.Close()

|

||||||

testBroker := testbroker.NewTestBroker(r)

|

testBroker := testbroker.NewTestBroker(r)

|

||||||

state := base.NewServerState()

|

state := &serverState{value: srvStateActive}

|

||||||

state.Set(base.StateActive)

|

|

||||||

hb := newHeartbeater(heartbeaterParams{

|

hb := newHeartbeater(heartbeaterParams{

|

||||||

logger: testLogger,

|

logger: testLogger,

|

||||||

broker: testBroker,

|

broker: testBroker,

|

||||||

|

|||||||

49

inspector.go

@@ -52,6 +52,9 @@ type QueueInfo struct {

|

|||||||

// It is an approximate memory usage value in bytes since the value is computed by sampling.

|

// It is an approximate memory usage value in bytes since the value is computed by sampling.

|

||||||

MemoryUsage int64

|

MemoryUsage int64

|

||||||

|

|

||||||

|

// Latency of the queue, measured by the oldest pending task in the queue.

|

||||||

|

Latency time.Duration

|

||||||

|

|

||||||

// Size is the total number of tasks in the queue.

|

// Size is the total number of tasks in the queue.

|

||||||

// The value is the sum of Pending, Active, Scheduled, Retry, and Archived.

|

// The value is the sum of Pending, Active, Scheduled, Retry, and Archived.

|

||||||

Size int

|

Size int

|

||||||

@@ -69,12 +72,17 @@ type QueueInfo struct {

|

|||||||

// Number of stored completed tasks.

|

// Number of stored completed tasks.

|

||||||

Completed int

|

Completed int

|

||||||

|

|

||||||

// Total number of tasks being processed during the given date.

|

// Total number of tasks being processed within the given date (counter resets daily).

|

||||||

// The number includes both succeeded and failed tasks.

|

// The number includes both succeeded and failed tasks.

|

||||||

Processed int

|

Processed int

|

||||||

// Total number of tasks failed to be processed during the given date.

|

// Total number of tasks failed to be processed within the given date (counter resets daily).

|

||||||

Failed int

|

Failed int

|

||||||

|

|

||||||

|

// Total number of tasks processed (cumulative).

|

||||||

|

ProcessedTotal int

|

||||||

|

// Total number of tasks failed (cumulative).

|

||||||

|

FailedTotal int

|

||||||

|

|

||||||

// Paused indicates whether the queue is paused.

|

// Paused indicates whether the queue is paused.

|

||||||

// If true, tasks in the queue will not be processed.

|

// If true, tasks in the queue will not be processed.

|

||||||

Paused bool

|

Paused bool

|

||||||

@@ -95,6 +103,7 @@ func (i *Inspector) GetQueueInfo(qname string) (*QueueInfo, error) {

|

|||||||

return &QueueInfo{

|

return &QueueInfo{

|

||||||

Queue: stats.Queue,

|

Queue: stats.Queue,

|

||||||

MemoryUsage: stats.MemoryUsage,

|

MemoryUsage: stats.MemoryUsage,

|

||||||

|

Latency: stats.Latency,

|

||||||

Size: stats.Size,

|

Size: stats.Size,

|

||||||

Pending: stats.Pending,

|

Pending: stats.Pending,

|

||||||

Active: stats.Active,

|

Active: stats.Active,

|

||||||

@@ -104,6 +113,8 @@ func (i *Inspector) GetQueueInfo(qname string) (*QueueInfo, error) {

|

|||||||

Completed: stats.Completed,

|

Completed: stats.Completed,

|

||||||

Processed: stats.Processed,

|

Processed: stats.Processed,

|

||||||

Failed: stats.Failed,

|

Failed: stats.Failed,

|

||||||

|

ProcessedTotal: stats.ProcessedTotal,

|

||||||

|

FailedTotal: stats.FailedTotal,

|

||||||

Paused: stats.Paused,

|

Paused: stats.Paused,

|

||||||

Timestamp: stats.Timestamp,

|

Timestamp: stats.Timestamp,

|

||||||

}, nil

|

}, nil

|

||||||

@@ -177,8 +188,8 @@ func (i *Inspector) DeleteQueue(qname string, force bool) error {

|

|||||||

|

|

||||||

// GetTaskInfo retrieves task information given a task id and queue name.

|

// GetTaskInfo retrieves task information given a task id and queue name.

|

||||||

//

|

//

|

||||||

// Returns ErrQueueNotFound if a queue with the given name doesn't exist.

|

// Returns an error wrapping ErrQueueNotFound if a queue with the given name doesn't exist.

|

||||||

// Returns ErrTaskNotFound if a task with the given id doesn't exist in the queue.

|

// Returns an error wrapping ErrTaskNotFound if a task with the given id doesn't exist in the queue.

|

||||||

func (i *Inspector) GetTaskInfo(qname, id string) (*TaskInfo, error) {

|

func (i *Inspector) GetTaskInfo(qname, id string) (*TaskInfo, error) {

|

||||||

info, err := i.rdb.GetTaskInfo(qname, id)

|

info, err := i.rdb.GetTaskInfo(qname, id)

|

||||||

switch {

|

switch {

|

||||||

@@ -297,16 +308,28 @@ func (i *Inspector) ListActiveTasks(qname string, opts ...ListOption) ([]*TaskIn

|

|||||||

case err != nil:

|

case err != nil:

|

||||||

return nil, fmt.Errorf("asynq: %v", err)

|

return nil, fmt.Errorf("asynq: %v", err)

|

||||||

}

|

}

|

||||||

|

expired, err := i.rdb.ListLeaseExpired(time.Now(), qname)

|

||||||

|

if err != nil {

|

||||||

|

return nil, fmt.Errorf("asynq: %v", err)

|

||||||

|

}

|

||||||

|

expiredSet := make(map[string]struct{}) // set of expired message IDs

|

||||||

|

for _, msg := range expired {

|

||||||

|

expiredSet[msg.ID] = struct{}{}

|

||||||

|

}

|

||||||

var tasks []*TaskInfo

|

var tasks []*TaskInfo

|

||||||

for _, i := range infos {

|

for _, i := range infos {

|

||||||

tasks = append(tasks, newTaskInfo(

|

t := newTaskInfo(

|

||||||

i.Message,

|

i.Message,

|

||||||

i.State,

|

i.State,

|

||||||

i.NextProcessAt,

|

i.NextProcessAt,

|

||||||

i.Result,

|

i.Result,

|

||||||

))

|

)

|

||||||

|

if _, ok := expiredSet[i.Message.ID]; ok {

|

||||||

|

t.IsOrphaned = true

|

||||||

}

|

}

|

||||||

return tasks, err

|

tasks = append(tasks, t)

|

||||||

|

}

|

||||||

|

return tasks, nil

|

||||||

}

|

}

|

||||||

|

|

||||||

// ListScheduledTasks retrieves scheduled tasks from the specified queue.

|

// ListScheduledTasks retrieves scheduled tasks from the specified queue.

|

||||||

@@ -479,8 +502,8 @@ func (i *Inspector) DeleteAllCompletedTasks(qname string) (int, error) {

|

|||||||

// The task needs to be in pending, scheduled, retry, or archived state,

|

// The task needs to be in pending, scheduled, retry, or archived state,

|

||||||

// otherwise DeleteTask will return an error.

|

// otherwise DeleteTask will return an error.

|

||||||

//

|

//

|

||||||

// If a queue with the given name doesn't exist, it returns ErrQueueNotFound.

|

// If a queue with the given name doesn't exist, it returns an error wrapping ErrQueueNotFound.

|

||||||

// If a task with the given id doesn't exist in the queue, it returns ErrTaskNotFound.

|

// If a task with the given id doesn't exist in the queue, it returns an error wrapping ErrTaskNotFound.

|

||||||

// If the task is in active state, it returns a non-nil error.

|

// If the task is in active state, it returns a non-nil error.

|

||||||

func (i *Inspector) DeleteTask(qname, id string) error {

|

func (i *Inspector) DeleteTask(qname, id string) error {

|

||||||

if err := base.ValidateQueueName(qname); err != nil {

|

if err := base.ValidateQueueName(qname); err != nil {

|

||||||

@@ -533,8 +556,8 @@ func (i *Inspector) RunAllArchivedTasks(qname string) (int, error) {

|

|||||||

// The task needs to be in scheduled, retry, or archived state, otherwise RunTask

|

// The task needs to be in scheduled, retry, or archived state, otherwise RunTask

|

||||||

// will return an error.

|

// will return an error.

|

||||||

//

|

//

|

||||||

// If a queue with the given name doesn't exist, it returns ErrQueueNotFound.

|

// If a queue with the given name doesn't exist, it returns an error wrapping ErrQueueNotFound.

|

||||||

// If a task with the given id doesn't exist in the queue, it returns ErrTaskNotFound.

|

// If a task with the given id doesn't exist in the queue, it returns an error wrapping ErrTaskNotFound.

|

||||||

// If the task is in pending or active state, it returns a non-nil error.

|

// If the task is in pending or active state, it returns a non-nil error.

|

||||||

func (i *Inspector) RunTask(qname, id string) error {

|

func (i *Inspector) RunTask(qname, id string) error {

|

||||||

if err := base.ValidateQueueName(qname); err != nil {

|

if err := base.ValidateQueueName(qname); err != nil {

|

||||||

@@ -586,8 +609,8 @@ func (i *Inspector) ArchiveAllRetryTasks(qname string) (int, error) {

|

|||||||

// The task needs to be in pending, scheduled, or retry state, otherwise ArchiveTask

|

// The task needs to be in pending, scheduled, or retry state, otherwise ArchiveTask

|

||||||

// will return an error.

|

// will return an error.

|

||||||

//