Mirror of https://github.com/hibiken/asynq.git (synced 2025-10-20 09:16:12 +08:00)

Compare commits: `v0.19.1` ... `sohail/pm-` (227 commits)

Commits (SHA1):

f1e7dc4056, ee17997650, 106c07adaa, 1c7195ff1a, 12cbba4926, 80479b528d, e14c312fe3, ad1f587403,
8b32b38fd5, 96a84fac0c, d2c207fbb8, 1a7c61ac49, 87375b5534, ffd75ebb5f, d64fd328cb, 4f00f52c1d,
580d69e88f, c97652d408, 4644d37ef4, 45c0fc6ad9, fd3eb86d95, 3dbda60333, 02c6dae7eb, 5cfcb71139,
c78e7b0ccd, b4db174032, 39f1d8c3e6, e70de721b8, 6c06ad7e45, a676d3d2fa, ef0d32965f, f16f9ac440,
63f7cb7b17, 04b3a3475d, 013190b824, 1e102a5392, e1a8a366a6, c8c8adfaa6, 03f4799712, 3f4e211a3b,
0655c569f5, 95a0768ae0, f4b56498f2, ae478d5b22, ff7ef48463, b1e13893ff, 0dc670d7d8, 461d922616,
5daa3c52ed, d04888e748, 174008843d, 2b632b93d5, b35b559d40, 8df0bfa583, b25d10b61d, 38f7499b71,
0a73fc6201, 1a11a33b4f, f0888df813, c2dd648a51, a3cca853a0, 83df622a92, fdbf54eb04, 16ec43cbca,
1e0bf88bf3, d0041c55a3, 7ef0511f35, 1ec90810db, 90188a093d, e05f0b7196, c1096a0fae, 9e548fc097,
6a7bf2ceff, e7fa0ae865, fc4b6713f6, 6b98c0bbae, ed1ab8ee55, e18c0381ad, 8b422c237c, e6f74c1c2b,
6edba6994e, 571f0d2613, 2165ed133b, 551b0c7119, 123d560a44, 5bef53d1ac, 90af7749ca, e4b8663154,
fde294be32, cbb1be34ac, 6ed70adf3b, 1f42d71e9b, f966a6c3b8, 8b057b8767, c72bfef094, dffb78cca4,
0275df8df4, cc777ebdaa, 783071c47f, bafed907e9, 0b8cfad703, c08f142b56, c70ff6a335, a04ba6411d,
d0209d9273, 6c954c87bf, 86fe31990b, e0e5d1ac24, 30d409371b, aefd276146, 94ad9e5e74, 5187844ca5,
4dd2b5738a, 9116c096ec, 5c723f597e, dd6f84c575, c438339c3d, 901938b0fe, 245d4fe663, 94719e325c,
8b2a787759, 451be7e50f, 578321f226, 2c783566f3, 39718f8bea, 829f64fd38, a369443955, de139cc18e,
74db013ab9, 725105ca03, d8f31e45f1, 9023cbf4be, 9279c09125, bc27126670, 0cfa7f47ba, 8a4fb71dd5,
7fb5b25944, 71bd8f0535, 4c8432e0ce, e939b5d166, 1acd62c760, 0149396bae, 45ed560708, 01eeb8756e,
47af17cfb4, eb064c2bab, 652939dd3a, efe3c74037, 74d2eea4e0, 60a4dc1401, 4b716780ef, e63f41fb24,
1c388baf06, 47a66231b3, 3551d3334c, 8b16ede8bc, c8658a53e6, 562506c7ba, 888b5590fb, 196db64d4d,
4b35eb0e1a, b29fe58434, 7849b1114c, 99c00bffeb, 4542b52da8, d841dc2f8d, ab28234767, eb27b0fe1e,
088be63ee4, ed69667e86, 4e8885276c, 401f7fb4fe, 61854ea1dc, f17c157b0f, 8b582899ad, e3d2939a4c,
2ce71e83b0, 1608366032, 3f4f0c1daa, f94a65dc9f, 04d7c8c38c, c04fd41653, 7e5efb0e30, cabf8d3627,
a19909f5f4, cea5110d15, 9b63e23274, de25201d9f, ec560afb01, d4006894ad, 59927509d8, 8211167de2,
d7169cd445, dfae8638e1, b9943de2ab, 871474f220, 87dc392c7f, dabcb120d5, bc2f1986d7, b8cb579407,
bca624792c, d865d89900, 852af7abd1, 5490d2c625, ebd7a32c0f, 55d0610a03, ab8a4f5b1e, d7ceb0c090,
8bd70c6f84, 10ab4e3745, 349f4c50fb, dff2e3a336, 65040af7b5, 053fe2d1ee, 25832e5e95, aa26f3819e,
d94614bb9b, ce46b07652, 2d0170541c, c1f08106da, 74cf804197, 8dfabfccb3, 5f20edcbd1, 1ddb2f7bce,
82d18e3d91, 43cb4ddf19, ddfc6747a1

`.github/FUNDING.yml` (12 changes)

```diff
@@ -1,12 +1,4 @@
 # These are supported funding model platforms
 
-github: [hibiken] # Replace with up to 4 GitHub Sponsors-enabled usernames e.g., [user1, user2]
-patreon: # Replace with a single Patreon username
-open_collective: # Replace with a single Open Collective username
-ko_fi: # Replace with a single Ko-fi username
-tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel
-community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry
-liberapay: # Replace with a single Liberapay username
-issuehunt: # Replace with a single IssueHunt username
-otechie: # Replace with a single Otechie username
-custom: # Replace with up to 4 custom sponsorship URLs e.g., ['link1', 'link2']
+github: [hibiken]
+open_collective: ken-hibino
```

`.github/ISSUE_TEMPLATE/bug_report.md` (15 changes)

```diff
@@ -3,13 +3,20 @@ name: Bug report
 about: Create a report to help us improve
 title: "[BUG] Description of the bug"
 labels: bug
-assignees: hibiken
+assignees:
+  - hibiken
+  - kamikazechaser
 
 ---
 
 **Describe the bug**
 A clear and concise description of what the bug is.
 
+**Environment (please complete the following information):**
+- OS: [e.g. MacOS, Linux]
+- `asynq` package version [e.g. v0.25.0]
+- Redis/Valkey version
+
 **To Reproduce**
 Steps to reproduce the behavior (Code snippets if applicable):
 1. Setup background processing ...
@@ -22,9 +29,5 @@ A clear and concise description of what you expected to happen.
 **Screenshots**
 If applicable, add screenshots to help explain your problem.
 
-**Environment (please complete the following information):**
-- OS: [e.g. MacOS, Linux]
-- Version of `asynq` package [e.g. v1.0.0]
-
 **Additional context**
 Add any other context about the problem here.
```

`.github/ISSUE_TEMPLATE/feature_request.md` (4 changes)

```diff
@@ -3,7 +3,9 @@ name: Feature request
 about: Suggest an idea for this project
 title: "[FEATURE REQUEST] Description of the feature request"
 labels: enhancement
-assignees: hibiken
+assignees:
+  - hibiken
+  - kamikazechaser
 
 ---
 
```

`.github/dependabot.yaml` (24 changes, new file)

```diff
@@ -0,0 +1,24 @@
+version: 2
+updates:
+  - package-ecosystem: "gomod"
+    directory: "/"
+    schedule:
+      interval: "weekly"
+    labels:
+      - "pr-deps"
+  - package-ecosystem: "gomod"
+    directory: "/tools"
+    schedule:
+      interval: "weekly"
+    labels:
+      - "pr-deps"
+  - package-ecosystem: "gomod"
+    directory: "/x"
+    schedule:
+      interval: "weekly"
+    labels:
+      - "pr-deps"
+  - package-ecosystem: "github-actions"
+    directory: "/"
+    schedule:
+      interval: "weekly"
```

`.github/workflows/benchstat.yml` (32 changes)

```diff
@@ -11,20 +11,20 @@ jobs:
     runs-on: ubuntu-latest
     services:
       redis:
-        image: redis
+        image: redis:7
         ports:
           - 6379:6379
     steps:
       - name: Checkout
-        uses: actions/checkout@v2
+        uses: actions/checkout@v4
       - name: Set up Go
-        uses: actions/setup-go@v2
+        uses: actions/setup-go@v5
         with:
-          go-version: 1.16.x
+          go-version: 1.23.x
       - name: Benchmark
         run: go test -run=^$ -bench=. -count=5 -timeout=60m ./... | tee -a new.txt
       - name: Upload Benchmark
-        uses: actions/upload-artifact@v2
+        uses: actions/upload-artifact@v4
         with:
           name: bench-incoming
           path: new.txt
@@ -33,22 +33,22 @@ jobs:
     runs-on: ubuntu-latest
     services:
       redis:
-        image: redis
+        image: redis:7
         ports:
           - 6379:6379
     steps:
       - name: Checkout
-        uses: actions/checkout@v2
+        uses: actions/checkout@v4
         with:
           ref: master
       - name: Set up Go
-        uses: actions/setup-go@v2
+        uses: actions/setup-go@v5
         with:
-          go-version: 1.15.x
+          go-version: 1.23.x
       - name: Benchmark
         run: go test -run=^$ -bench=. -count=5 -timeout=60m ./... | tee -a old.txt
       - name: Upload Benchmark
-        uses: actions/upload-artifact@v2
+        uses: actions/upload-artifact@v4
         with:
           name: bench-current
           path: old.txt
@@ -58,25 +58,25 @@ jobs:
     runs-on: ubuntu-latest
     steps:
       - name: Checkout
-        uses: actions/checkout@v2
+        uses: actions/checkout@v4
       - name: Set up Go
-        uses: actions/setup-go@v2
+        uses: actions/setup-go@v5
         with:
-          go-version: 1.15.x
+          go-version: 1.23.x
       - name: Install benchstat
         run: go get -u golang.org/x/perf/cmd/benchstat
       - name: Download Incoming
-        uses: actions/download-artifact@v2
+        uses: actions/download-artifact@v4
         with:
           name: bench-incoming
       - name: Download Current
-        uses: actions/download-artifact@v2
+        uses: actions/download-artifact@v4
         with:
           name: bench-current
       - name: Benchstat Results
         run: benchstat old.txt new.txt | tee -a benchstat.txt
       - name: Upload benchstat results
-        uses: actions/upload-artifact@v2
+        uses: actions/upload-artifact@v4
         with:
           name: benchstat
           path: benchstat.txt
```
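Since Go 1.18, `go get` no longer builds or installs executables in module mode. It only edits the requirements in `go.mod`. With `go-version: 1.23.x`, the `Install benchstat` step in this workflow therefore probably leaves no `benchstat` binary available, and the `Benchstat Results` step would fail. The fragment below is a sketch of a replacement step, not the repository's actual file. It installs the tool with `go install` at an explicit module version:

```yaml
      # Sketch: replaces the `go get -u ...` step. `@latest` could be pinned to a
      # specific golang.org/x/perf version for reproducible benchmark runs.
      - name: Install benchstat
        run: go install golang.org/x/perf/cmd/benchstat@latest
```

`go install` writes the binary to `$(go env GOPATH)/bin`, which `actions/setup-go` normally adds to the `PATH`. The later `benchstat old.txt new.txt` step can then stay unchanged.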

`.github/workflows/build.yml` (62 changes)

```diff
@@ -7,29 +7,77 @@ jobs:
     strategy:
       matrix:
         os: [ubuntu-latest]
-        go-version: [1.14.x, 1.15.x, 1.16.x, 1.17.x]
+        go-version: [1.22.x, 1.23.x]
     runs-on: ${{ matrix.os }}
     services:
       redis:
-        image: redis
+        image: redis:7
         ports:
           - 6379:6379
     steps:
-      - uses: actions/checkout@v2
+      - uses: actions/checkout@v4
 
       - name: Set up Go
-        uses: actions/setup-go@v2
+        uses: actions/setup-go@v5
         with:
           go-version: ${{ matrix.go-version }}
+          cache: false
 
-      - name: Build
+      - name: Build core module
         run: go build -v ./...
 
-      - name: Test
+      - name: Build x module
+        run: cd x && go build -v ./... && cd ..
+
+      - name: Test core module
         run: go test -race -v -coverprofile=coverage.txt -covermode=atomic ./...
 
+      - name: Test x module
+        run: cd x && go test -race -v ./... && cd ..
+
       - name: Benchmark Test
         run: go test -run=^$ -bench=. -loglevel=debug ./...
 
       - name: Upload coverage to Codecov
-        uses: codecov/codecov-action@v1
+        uses: codecov/codecov-action@v5
+
+  build-tool:
+    strategy:
+      matrix:
+        os: [ubuntu-latest]
+        go-version: [1.22.x, 1.23.x]
+    runs-on: ${{ matrix.os }}
+    services:
+      redis:
+        image: redis:7
+        ports:
+          - 6379:6379
+    steps:
+      - uses: actions/checkout@v4
+
+      - name: Set up Go
+        uses: actions/setup-go@v5
+        with:
+          go-version: ${{ matrix.go-version }}
+          cache: false
+
+      - name: Build tools module
+        run: cd tools && go build -v ./... && cd ..
+
+      - name: Test tools module
+        run: cd tools && go test -race -v ./... && cd ..
+
+  golangci:
+    name: lint
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v4
+
+      - uses: actions/setup-go@v5
+        with:
+          go-version: stable
+
+      - name: golangci-lint
+        uses: golangci/golangci-lint-action@v6
+        with:
+          version: v1.61
```
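Each `run` step in a GitHub Actions job starts a fresh shell in the workspace directory. The trailing `&& cd ..` in the `x` and `tools` steps of this workflow therefore has no effect on later steps. Actions also supports a per-step `working-directory` key, which states the same intent without `cd`. The fragment below sketches the two `x` module steps written that way. It is an equivalent alternative, not the repository's actual configuration:

```yaml
      # Sketch: same commands as the "Build x module" / "Test x module" steps,
      # run from the x/ module directory via working-directory instead of cd.
      - name: Build x module
        working-directory: x
        run: go build -v ./...

      - name: Test x module
        working-directory: x
        run: go test -race -v ./...
```

A failing `go build` or `go test` still fails the step in both forms. With `cd x && ...`, the `&&` chain short-circuits and the non-zero exit status is returned. With `working-directory`, the command's own exit status is returned.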

`.gitignore` (6 changes)

```diff
@@ -1,3 +1,4 @@
+vendor
 # Binaries for programs and plugins
 *.exe
 *.exe~
@@ -14,12 +15,13 @@
 # Ignore examples for now
 /examples
 
-# Ignore command binary
+# Ignore tool binaries
 /tools/asynq/asynq
+/tools/metrics_exporter/metrics_exporter
 
 # Ignore asynq config file
 .asynq.*
 
 # Ignore editor config files
 .vscode
 .idea
```

`CHANGELOG.md` (98 changes)

```diff
@@ -7,6 +7,98 @@ and this project adheres to [Semantic Versioning](https://semver.org/spec/v2.0.0
 
 ## [Unreleased]
 
+## [0.25.0] - 2024-10-29
+
+### Upgrades
+- Minumum go version is set to 1.22 (PR: https://github.com/hibiken/asynq/pull/925)
+- Internal protobuf package is upgraded to address security advisories (PR: https://github.com/hibiken/asynq/pull/925)
+- Most packages are upgraded
+- CI/CD spec upgraded
+
+### Added
+- `IsPanicError` function is introduced to support catching of panic errors when processing tasks (PR: https://github.com/hibiken/asynq/pull/491)
+- `JanitorInterval` and `JanitorBatchSize` are added as Server options (PR: https://github.com/hibiken/asynq/pull/715)
+- `NewClientFromRedisClient` is introduced to allow reusing an existing redis client (PR: https://github.com/hibiken/asynq/pull/742)
+- `TaskCheckInterval` config option is added to specify the interval between checks for new tasks to process when all queues are empty (PR: https://github.com/hibiken/asynq/pull/694)
+- `Ping` method is added to Client, Server and Scheduler ((PR: https://github.com/hibiken/asynq/pull/585))
+- `RevokeTask` error type is introduced to prevent a task from being retried or archived (PR: https://github.com/hibiken/asynq/pull/882)
+- `SentinelUsername` is added as a redis config option (PR: https://github.com/hibiken/asynq/pull/924)
+- Some jitter is introduced to improve latency when fetching jobs in the processor (PR: https://github.com/hibiken/asynq/pull/868)
+- Add task enqueue command to the CLI (PR: https://github.com/hibiken/asynq/pull/918)
+- Add a map cache (concurrent safe) to keep track of queues that ultimately reduces redis load when enqueuing tasks (PR: https://github.com/hibiken/asynq/pull/946)
+
+### Fixes
+- Archived tasks that are trimmed should now be deleted (PR: https://github.com/hibiken/asynq/pull/743)
+- Fix lua script when listing task messages with an expired lease (PR: https://github.com/hibiken/asynq/pull/709)
+- Fix potential context leaks due to cancellation not being called (PR: https://github.com/hibiken/asynq/pull/926)
+- Misc documentation fixes
+- Misc test fixes
+
+
+## [0.24.1] - 2023-05-01
+
+### Changed
+- Updated package version dependency for go-redis
+
+## [0.24.0] - 2023-01-02
+
+### Added
+- `PreEnqueueFunc`, `PostEnqueueFunc` is added in `Scheduler` and deprecated `EnqueueErrorHandler` (PR: https://github.com/hibiken/asynq/pull/476)
+
+### Changed
+- Removed error log when `Scheduler` failed to enqueue a task. Use `PostEnqueueFunc` to check for errors and task actions if needed.
+- Changed log level from ERROR to WARNINING when `Scheduler` failed to record `SchedulerEnqueueEvent`.
+
+## [0.23.0] - 2022-04-11
+
+### Added
+
+- `Group` option is introduced to enqueue task in a group.
+- `GroupAggregator` and related types are introduced for task aggregation feature.
+- `GroupGracePeriod`, `GroupMaxSize`, `GroupMaxDelay`, and `GroupAggregator` fields are added to `Config`.
+- `Inspector` has new methods related to "aggregating tasks".
+- `Group` field is added to `TaskInfo`.
+- (CLI): `group ls` command is added
+- (CLI): `task ls` supports listing aggregating tasks via `--state=aggregating --group=<GROUP>` flags
+- Enable rediss url parsing support
+
+### Fixed
+
+- Fixed overflow issue with 32-bit systems (For details, see https://github.com/hibiken/asynq/pull/426)
+
+## [0.22.1] - 2022-02-20
+
+### Fixed
+
+- Fixed Redis version compatibility: Keep support for redis v4.0+
+
+## [0.22.0] - 2022-02-19
+
+### Added
+
+- `BaseContext` is introduced in `Config` to specify callback hook to provide a base `context` from which `Handler` `context` is derived
+- `IsOrphaned` field is added to `TaskInfo` to describe a task left in active state with no worker processing it.
+
+### Changed
+
+- `Server` now recovers tasks with an expired lease. Recovered tasks are retried/archived with `ErrLeaseExpired` error.
+
+## [0.21.0] - 2022-01-22
+
+### Added
+
+- `PeriodicTaskManager` is added. Prefer using this over `Scheduler` as it has better support for dynamic periodic tasks.
+- The `asynq stats` command now supports a `--json` option, making its output a JSON object
+- Introduced new configuration for `DelayedTaskCheckInterval`. See [godoc](https://godoc.org/github.com/hibiken/asynq) for more details.
+
+## [0.20.0] - 2021-12-19
+
+### Added
+
+- Package `x/metrics` is added.
+- Tool `tools/metrics_exporter` binary is added.
+- `ProcessedTotal` and `FailedTotal` fields were added to `QueueInfo` struct.
+
 ## [0.19.1] - 2021-12-12
 
 ### Added
@@ -231,9 +323,9 @@ Use `ProcessIn` or `ProcessAt` option to schedule a task instead of `EnqueueIn`
 
 #### `Inspector`
 
 All Inspector methods are scoped to a queue, and the methods take `qname (string)` as the first argument.
 `EnqueuedTask` is renamed to `PendingTask` and its corresponding methods.
 `InProgressTask` is renamed to `ActiveTask` and its corresponding methods.
 Command "Enqueue" is replaced by the verb "Run" (e.g. `EnqueueAllScheduledTasks` --> `RunAllScheduledTasks`)
 
 #### `CLI`
```

`CODE_OF_CONDUCT.md` (128 changes, new file)

```diff
@@ -0,0 +1,128 @@
+# Contributor Covenant Code of Conduct
+
+## Our Pledge
+
+We as members, contributors, and leaders pledge to make participation in our
+community a harassment-free experience for everyone, regardless of age, body
+size, visible or invisible disability, ethnicity, sex characteristics, gender
+identity and expression, level of experience, education, socio-economic status,
+nationality, personal appearance, race, religion, or sexual identity
+and orientation.
+
+We pledge to act and interact in ways that contribute to an open, welcoming,
+diverse, inclusive, and healthy community.
+
+## Our Standards
+
+Examples of behavior that contributes to a positive environment for our
+community include:
+
+* Demonstrating empathy and kindness toward other people
+* Being respectful of differing opinions, viewpoints, and experiences
+* Giving and gracefully accepting constructive feedback
+* Accepting responsibility and apologizing to those affected by our mistakes,
+  and learning from the experience
+* Focusing on what is best not just for us as individuals, but for the
+  overall community
+
+Examples of unacceptable behavior include:
+
+* The use of sexualized language or imagery, and sexual attention or
+  advances of any kind
+* Trolling, insulting or derogatory comments, and personal or political attacks
+* Public or private harassment
+* Publishing others' private information, such as a physical or email
+  address, without their explicit permission
+* Other conduct which could reasonably be considered inappropriate in a
+  professional setting
+
+## Enforcement Responsibilities
+
+Community leaders are responsible for clarifying and enforcing our standards of
+acceptable behavior and will take appropriate and fair corrective action in
+response to any behavior that they deem inappropriate, threatening, offensive,
+or harmful.
+
+Community leaders have the right and responsibility to remove, edit, or reject
+comments, commits, code, wiki edits, issues, and other contributions that are
+not aligned to this Code of Conduct, and will communicate reasons for moderation
+decisions when appropriate.
+
+## Scope
+
+This Code of Conduct applies within all community spaces, and also applies when
+an individual is officially representing the community in public spaces.
+Examples of representing our community include using an official e-mail address,
+posting via an official social media account, or acting as an appointed
+representative at an online or offline event.
+
+## Enforcement
+
+Instances of abusive, harassing, or otherwise unacceptable behavior may be
+reported to the community leaders responsible for enforcement at
+ken.hibino7@gmail.com.
+All complaints will be reviewed and investigated promptly and fairly.
+
+All community leaders are obligated to respect the privacy and security of the
+reporter of any incident.
+
+## Enforcement Guidelines
+
+Community leaders will follow these Community Impact Guidelines in determining
+the consequences for any action they deem in violation of this Code of Conduct:
+
+### 1. Correction
+
+**Community Impact**: Use of inappropriate language or other behavior deemed
+unprofessional or unwelcome in the community.
+
+**Consequence**: A private, written warning from community leaders, providing
+clarity around the nature of the violation and an explanation of why the
+behavior was inappropriate. A public apology may be requested.
+
+### 2. Warning
+
+**Community Impact**: A violation through a single incident or series
+of actions.
+
+**Consequence**: A warning with consequences for continued behavior. No
+interaction with the people involved, including unsolicited interaction with
+those enforcing the Code of Conduct, for a specified period of time. This
+includes avoiding interactions in community spaces as well as external channels
+like social media. Violating these terms may lead to a temporary or
+permanent ban.
+
+### 3. Temporary Ban
+
+**Community Impact**: A serious violation of community standards, including
+sustained inappropriate behavior.
+
+**Consequence**: A temporary ban from any sort of interaction or public
+communication with the community for a specified period of time. No public or
+private interaction with the people involved, including unsolicited interaction
+with those enforcing the Code of Conduct, is allowed during this period.
+Violating these terms may lead to a permanent ban.
+
+### 4. Permanent Ban
+
+**Community Impact**: Demonstrating a pattern of violation of community
+standards, including sustained inappropriate behavior, harassment of an
+individual, or aggression toward or disparagement of classes of individuals.
+
+**Consequence**: A permanent ban from any sort of public interaction within
+the community.
+
+## Attribution
+
+This Code of Conduct is adapted from the [Contributor Covenant][homepage],
+version 2.0, available at
+https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.
+
+Community Impact Guidelines were inspired by [Mozilla's code of conduct
+enforcement ladder](https://github.com/mozilla/diversity).
+
+[homepage]: https://www.contributor-covenant.org
+
+For answers to common questions about this code of conduct, see the FAQ at
+https://www.contributor-covenant.org/faq. Translations are available at
+https://www.contributor-covenant.org/translations.
```

@@ -38,7 +38,7 @@ Thank you! We'll try to respond as quickly as possible.
 ## Contributing Code
 
 1. Fork this repo
-2. Download your fork `git clone https://github.com/your-username/asynq && cd asynq`
+2. Download your fork `git clone git@github.com:your-username/asynq.git && cd asynq`
 3. Create your branch `git checkout -b your-branch-name`
 4. Make and commit your changes
 5. Push the branch `git push origin your-branch-name`

6

Makefile

@@ -4,4 +4,8 @@ proto: internal/proto/asynq.proto
 	protoc -I=$(ROOT_DIR)/internal/proto \
 		--go_out=$(ROOT_DIR)/internal/proto \
 		--go_opt=module=github.com/hibiken/asynq/internal/proto \
 		$(ROOT_DIR)/internal/proto/asynq.proto
+
+.PHONY: lint
+lint:
+	golangci-lint run

31

README.md

@@ -33,23 +33,30 @@ Task queues are used as a mechanism to distribute work across multiple machines.
 - Low latency to add a task since writes are fast in Redis
 - De-duplication of tasks using [unique option](https://github.com/hibiken/asynq/wiki/Unique-Tasks)
 - Allow [timeout and deadline per task](https://github.com/hibiken/asynq/wiki/Task-Timeout-and-Cancelation)
+- Allow [aggregating group of tasks](https://github.com/hibiken/asynq/wiki/Task-aggregation) to batch multiple successive operations
 - [Flexible handler interface with support for middlewares](https://github.com/hibiken/asynq/wiki/Handler-Deep-Dive)
 - [Ability to pause queue](/tools/asynq/README.md#pause) to stop processing tasks from the queue
 - [Periodic Tasks](https://github.com/hibiken/asynq/wiki/Periodic-Tasks)
-- [Support Redis Cluster](https://github.com/hibiken/asynq/wiki/Redis-Cluster) for automatic sharding and high availability
 - [Support Redis Sentinels](https://github.com/hibiken/asynq/wiki/Automatic-Failover) for high availability
+- Integration with [Prometheus](https://prometheus.io/) to collect and visualize queue metrics
 - [Web UI](#web-ui) to inspect and remote-control queues and tasks
 - [CLI](#command-line-tool) to inspect and remote-control queues and tasks
 
 ## Stability and Compatibility
 
-**Status**: The library is currently undergoing **heavy development** with frequent, breaking API changes.
+**Status**: The library relatively stable and is currently undergoing **moderate development** with less frequent breaking API changes.
 
-> ☝️ **Important Note**: Current major version is zero (`v0.x.x`) to accomodate rapid development and fast iteration while getting early feedback from users (_feedback on APIs are appreciated!_). The public API could change without a major version update before `v1.0.0` release.
+> ☝️ **Important Note**: Current major version is zero (`v0.x.x`) to accommodate rapid development and fast iteration while getting early feedback from users (_feedback on APIs are appreciated!_). The public API could change without a major version update before `v1.0.0` release.
 
+### Redis Cluster Compatibility
+
+Some of the lua scripts in this library may not be compatible with Redis Cluster.
+
+## Sponsoring
+If you are using this package in production, **please consider sponsoring the project to show your support!**
 
 ## Quickstart
-Make sure you have Go installed ([download](https://golang.org/dl/)). Version `1.14` or higher is required.
+Make sure you have Go installed ([download](https://golang.org/dl/)). The **last two** Go versions are supported (See https://go.dev/dl).
 
 Initialize your project by creating a folder and then running `go mod init github.com/your/repo` ([learn more](https://blog.golang.org/using-go-modules)) inside the folder. Then install Asynq library with the [`go get`](https://golang.org/cmd/go/#hdr-Add_dependencies_to_current_module_and_install_them) command:
 

@@ -65,8 +72,11 @@ Next, write a package that encapsulates task creation and task handling.
 package tasks
 
 import (
+    "context"
+    "encoding/json"
     "fmt"
+    "log"
+    "time"
     "github.com/hibiken/asynq"
 )
 

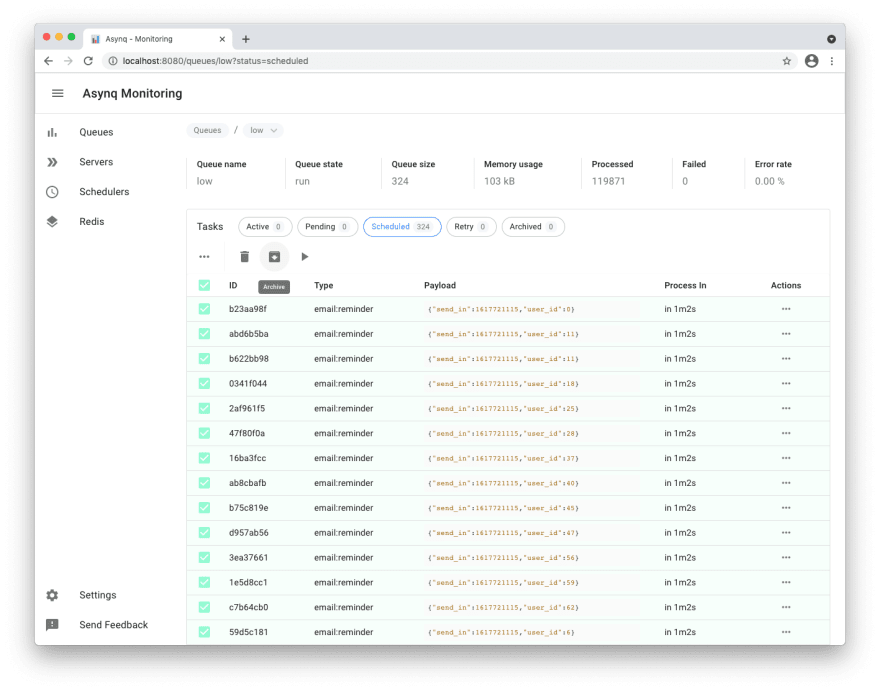

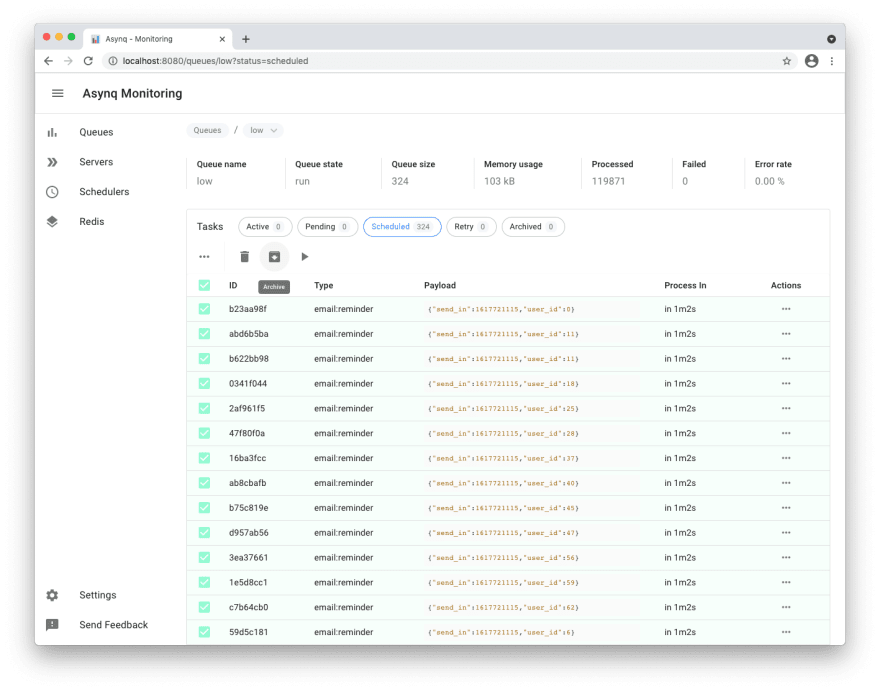

@@ -271,6 +281,9 @@ Here's a few screenshots of the Web UI:
 
 
 
+**Metrics view**
+<img width="1532" alt="Screen Shot 2021-12-19 at 4 37 19 PM" src="https://user-images.githubusercontent.com/10953044/146777420-cae6c476-bac6-469c-acce-b2f6584e8707.png">
+

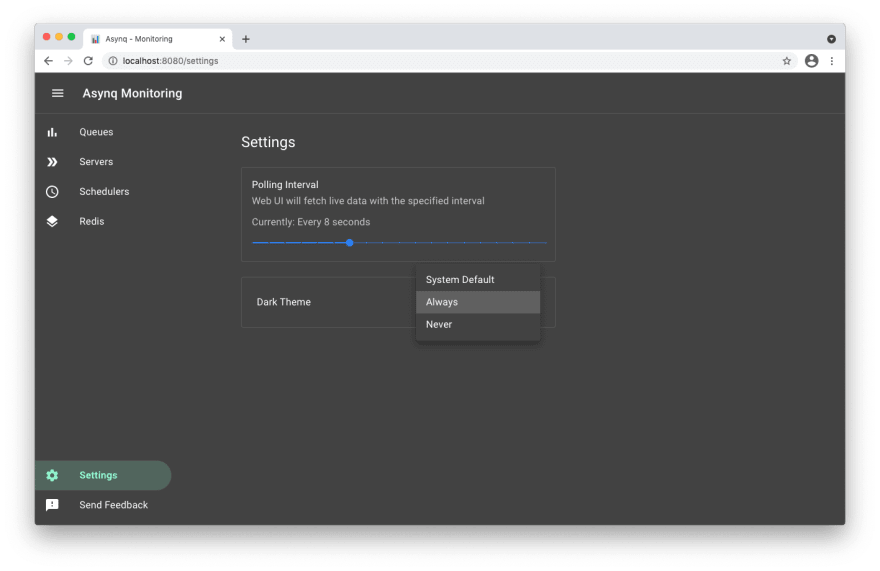

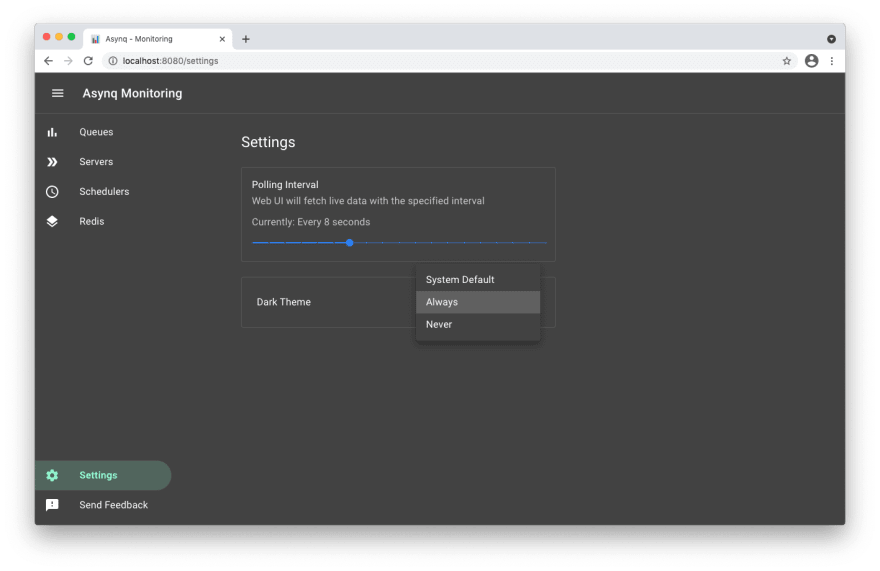

 **Settings and adaptive dark mode**
 
 

@@ -284,12 +297,12 @@ Asynq ships with a command line tool to inspect the state of queues and tasks.
 To install the CLI tool, run the following command:
 
 ```sh
-go get -u github.com/hibiken/asynq/tools/asynq
+go install github.com/hibiken/asynq/tools/asynq@latest
 ```
 
-Here's an example of running the `asynq stats` command:
+Here's an example of running the `asynq dash` command:
 
 
 
 For details on how to use the tool, refer to the tool's [README](/tools/asynq/README.md).
 

176

aggregator.go

Normal file

@@ -0,0 +1,176 @@
// Copyright 2022 Kentaro Hibino. All rights reserved.
// Use of this source code is governed by a MIT license
// that can be found in the LICENSE file.

package asynq

import (
	"context"
	"sync"
	"time"

	"github.com/hibiken/asynq/internal/base"
	"github.com/hibiken/asynq/internal/log"
)

// An aggregator is responsible for checking groups and aggregate into one task
// if any of the grouping condition is met.
type aggregator struct {
	logger *log.Logger
	broker base.Broker
	client *Client

	// channel to communicate back to the long running "aggregator" goroutine.
	done chan struct{}

	// list of queue names to check and aggregate.
	queues []string

	// Group configurations
	gracePeriod time.Duration
	maxDelay    time.Duration
	maxSize     int

	// User provided group aggregator.
	ga GroupAggregator

	// interval used to check for aggregation
	interval time.Duration

	// sema is a counting semaphore to ensure the number of active aggregating function
	// does not exceed the limit.
	sema chan struct{}
}

type aggregatorParams struct {
	logger          *log.Logger
	broker          base.Broker
	queues          []string
	gracePeriod     time.Duration
	maxDelay        time.Duration
	maxSize         int
	groupAggregator GroupAggregator
}

const (
	// Maximum number of aggregation checks in flight concurrently.
	maxConcurrentAggregationChecks = 3

	// Default interval used for aggregation checks. If the provided gracePeriod is less than
	// the default, use the gracePeriod.
	defaultAggregationCheckInterval = 7 * time.Second
)

func newAggregator(params aggregatorParams) *aggregator {
	interval := defaultAggregationCheckInterval
	if params.gracePeriod < interval {
		interval = params.gracePeriod
	}
	return &aggregator{
		logger:      params.logger,
		broker:      params.broker,
		client:      &Client{broker: params.broker},
		done:        make(chan struct{}),
		queues:      params.queues,
		gracePeriod: params.gracePeriod,
		maxDelay:    params.maxDelay,
		maxSize:     params.maxSize,
		ga:          params.groupAggregator,
		sema:        make(chan struct{}, maxConcurrentAggregationChecks),
		interval:    interval,
	}
}

func (a *aggregator) shutdown() {
	if a.ga == nil {
		return
	}
	a.logger.Debug("Aggregator shutting down...")
	// Signal the aggregator goroutine to stop.
	a.done <- struct{}{}
}

func (a *aggregator) start(wg *sync.WaitGroup) {
	if a.ga == nil {
		return
	}
	wg.Add(1)
	go func() {
		defer wg.Done()
		ticker := time.NewTicker(a.interval)
		for {
			select {
			case <-a.done:
				a.logger.Debug("Waiting for all aggregation checks to finish...")
				// block until all aggregation checks released the token
				for i := 0; i < cap(a.sema); i++ {
					a.sema <- struct{}{}
				}
				a.logger.Debug("Aggregator done")
				ticker.Stop()
				return
			case t := <-ticker.C:
				a.exec(t)
			}
		}
	}()
}

func (a *aggregator) exec(t time.Time) {
	select {
	case a.sema <- struct{}{}: // acquire token
		go a.aggregate(t)
	default:
		// If the semaphore blocks, then we are currently running max number of
		// aggregation checks. Skip this round and log warning.
		a.logger.Warnf("Max number of aggregation checks in flight. Skipping")
	}
}

func (a *aggregator) aggregate(t time.Time) {
	defer func() { <-a.sema /* release token */ }()
	for _, qname := range a.queues {
		groups, err := a.broker.ListGroups(qname)
		if err != nil {
			a.logger.Errorf("Failed to list groups in queue: %q", qname)
			continue
		}
		for _, gname := range groups {
			aggregationSetID, err := a.broker.AggregationCheck(
				qname, gname, t, a.gracePeriod, a.maxDelay, a.maxSize)
			if err != nil {
				a.logger.Errorf("Failed to run aggregation check: queue=%q group=%q", qname, gname)
				continue
			}
			if aggregationSetID == "" {
				a.logger.Debugf("No aggregation needed at this time: queue=%q group=%q", qname, gname)
				continue
			}

			// Aggregate and enqueue.
			msgs, deadline, err := a.broker.ReadAggregationSet(qname, gname, aggregationSetID)
			if err != nil {
				a.logger.Errorf("Failed to read aggregation set: queue=%q, group=%q, setID=%q",
					qname, gname, aggregationSetID)
				continue
			}
			tasks := make([]*Task, len(msgs))
			for i, m := range msgs {
				tasks[i] = NewTask(m.Type, m.Payload)
			}
			aggregatedTask := a.ga.Aggregate(gname, tasks)
			ctx, cancel := context.WithDeadline(context.Background(), deadline)
			if _, err := a.client.EnqueueContext(ctx, aggregatedTask, Queue(qname)); err != nil {
				a.logger.Errorf("Failed to enqueue aggregated task (queue=%q, group=%q, setID=%q): %v",
					qname, gname, aggregationSetID, err)
				cancel()
				continue
			}
			if err := a.broker.DeleteAggregationSet(ctx, qname, gname, aggregationSetID); err != nil {
				a.logger.Warnf("Failed to delete aggregation set: queue=%q, group=%q, setID=%q",
					qname, gname, aggregationSetID)
			}
			cancel()
		}
	}
}
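In `exec`, `aggregate` and the shutdown branch of `start`, a buffered channel serves as a counting semaphore. A non-blocking send acquires a token; if none is free, the round is skipped. A deferred receive releases the token. On shutdown, the loop fills the channel to its capacity, which waits for every in-flight check. The standalone sketch below isolates that pattern using only the standard library. `checker`, `tryRun` and `drain` are hypothetical names and are not part of asynq. A sleeping `work` function stands in for a broker round-trip.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// checker is a hypothetical stand-in for the aggregator's token handling:
// the buffered channel sema is a counting semaphore with cap(sema) tokens.
type checker struct {
	sema chan struct{}
}

func newChecker(limit int) *checker {
	return &checker{sema: make(chan struct{}, limit)}
}

// tryRun starts work in its own goroutine if a token is free and reports
// whether it did. When every token is taken it skips the round instead of
// blocking the caller (the ticker loop, in the aggregator's case).
func (c *checker) tryRun(work func()) bool {
	select {
	case c.sema <- struct{}{}: // acquire token
		go func() {
			defer func() { <-c.sema }() // release token once work returns
			work()
		}()
		return true
	default:
		return false
	}
}

// drain blocks until every in-flight run has released its token by
// acquiring all cap(sema) tokens itself, mirroring the shutdown path.
func (c *checker) drain() {
	for i := 0; i < cap(c.sema); i++ {
		c.sema <- struct{}{}
	}
}

func main() {
	c := newChecker(3)
	var finished int32
	slow := func() {
		time.Sleep(200 * time.Millisecond) // simulated aggregation check
		atomic.AddInt32(&finished, 1)
	}
	for round := 0; round < 4; round++ {
		fmt.Printf("round %d started: %v\n", round, c.tryRun(slow))
	}
	c.drain()
	fmt.Println("finished checks:", atomic.LoadInt32(&finished))
}
```

With a limit of 3, the fourth call should be skipped while the first three are still sleeping, and `drain` returns only after all three have released their tokens. `drain` leaves the semaphore full, which suits a one-way shutdown like the aggregator's.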

165

aggregator_test.go

Normal file

@@ -0,0 +1,165 @@
// Copyright 2022 Kentaro Hibino. All rights reserved.
// Use of this source code is governed by a MIT license
// that can be found in the LICENSE file.

package asynq

import (
	"sync"
	"testing"
	"time"

	"github.com/google/go-cmp/cmp"
	"github.com/hibiken/asynq/internal/base"
	"github.com/hibiken/asynq/internal/rdb"
	h "github.com/hibiken/asynq/internal/testutil"
)

func TestAggregator(t *testing.T) {
	r := setup(t)
	defer r.Close()
	rdbClient := rdb.NewRDB(r)
	client := Client{broker: rdbClient}

	tests := []struct {
		desc             string
		gracePeriod      time.Duration
		maxDelay         time.Duration
		maxSize          int
		aggregateFunc    func(gname string, tasks []*Task) *Task
		tasks            []*Task       // tasks to enqueue
		enqueueFrequency time.Duration // time between one enqueue event to another
		waitTime         time.Duration // time to wait
		wantGroups       map[string]map[string][]base.Z
		wantPending      map[string][]*base.TaskMessage
	}{
		{
			desc:        "group older than the grace period should be aggregated",
			gracePeriod: 1 * time.Second,
			maxDelay:    0, // no maxdelay limit
			maxSize:     0, // no maxsize limit
			aggregateFunc: func(gname string, tasks []*Task) *Task {
				return NewTask(gname, nil, MaxRetry(len(tasks))) // use max retry to see how many tasks were aggregated
			},
			tasks: []*Task{
				NewTask("task1", nil, Group("mygroup")),
				NewTask("task2", nil, Group("mygroup")),
				NewTask("task3", nil, Group("mygroup")),
			},
			enqueueFrequency: 300 * time.Millisecond,
			waitTime:         3 * time.Second,
			wantGroups: map[string]map[string][]base.Z{
				"default": {
					"mygroup": {},
				},
			},
			wantPending: map[string][]*base.TaskMessage{
				"default": {
					h.NewTaskMessageBuilder().SetType("mygroup").SetRetry(3).Build(),
				},
			},
		},
		{
			desc:        "group older than the max-delay should be aggregated",
			gracePeriod: 2 * time.Second,
			maxDelay:    4 * time.Second,
			maxSize:     0, // no maxsize limit
			aggregateFunc: func(gname string, tasks []*Task) *Task {
				return NewTask(gname, nil, MaxRetry(len(tasks))) // use max retry to see how many tasks were aggregated
			},
			tasks: []*Task{
				NewTask("task1", nil, Group("mygroup")), // time 0
				NewTask("task2", nil, Group("mygroup")), // time 1s
				NewTask("task3", nil, Group("mygroup")), // time 2s
				NewTask("task4", nil, Group("mygroup")), // time 3s
			},
			enqueueFrequency: 1 * time.Second,
			waitTime:         4 * time.Second,
			wantGroups: map[string]map[string][]base.Z{
				"default": {
					"mygroup": {},
				},
			},
			wantPending: map[string][]*base.TaskMessage{
				"default": {
					h.NewTaskMessageBuilder().SetType("mygroup").SetRetry(4).Build(),
				},
			},
		},
		{
			desc:        "group reached the max-size should be aggregated",
			gracePeriod: 1 * time.Minute,
			maxDelay:    0, // no maxdelay limit
			maxSize:     5,
			aggregateFunc: func(gname string, tasks []*Task) *Task {
				return NewTask(gname, nil, MaxRetry(len(tasks))) // use max retry to see how many tasks were aggregated
			},
			tasks: []*Task{
				NewTask("task1", nil, Group("mygroup")),
				NewTask("task2", nil, Group("mygroup")),
				NewTask("task3", nil, Group("mygroup")),
				NewTask("task4", nil, Group("mygroup")),
				NewTask("task5", nil, Group("mygroup")),
			},
			enqueueFrequency: 300 * time.Millisecond,
			waitTime:         defaultAggregationCheckInterval * 2,
			wantGroups: map[string]map[string][]base.Z{
				"default": {
					"mygroup": {},
				},
			},
			wantPending: map[string][]*base.TaskMessage{
				"default": {
					h.NewTaskMessageBuilder().SetType("mygroup").SetRetry(5).Build(),
				},
			},
		},
	}

	for _, tc := range tests {
		h.FlushDB(t, r)

		aggregator := newAggregator(aggregatorParams{
			logger:          testLogger,
			broker:          rdbClient,
			queues:          []string{"default"},
			gracePeriod:     tc.gracePeriod,
			maxDelay:        tc.maxDelay,
			maxSize:         tc.maxSize,
			groupAggregator: GroupAggregatorFunc(tc.aggregateFunc),
		})

		var wg sync.WaitGroup
		aggregator.start(&wg)

		for _, task := range tc.tasks {
			if _, err := client.Enqueue(task); err != nil {
				t.Errorf("%s: Client Enqueue failed: %v", tc.desc, err)
				aggregator.shutdown()
				wg.Wait()
				continue
			}
			time.Sleep(tc.enqueueFrequency)
		}

		time.Sleep(tc.waitTime)

		for qname, groups := range tc.wantGroups {
			for gname, want := range groups {
				gotGroup := h.GetGroupEntries(t, r, qname, gname)
				if diff := cmp.Diff(want, gotGroup, h.SortZSetEntryOpt); diff != "" {
					t.Errorf("%s: mismatch found in %q; (-want,+got)\n%s", tc.desc, base.GroupKey(qname, gname), diff)
				}
			}
		}

		for qname, want := range tc.wantPending {
			gotPending := h.GetPendingMessages(t, r, qname)
			if diff := cmp.Diff(want, gotPending, h.SortMsgOpt, h.IgnoreIDOpt); diff != "" {
				t.Errorf("%s: mismatch found in %q; (-want,+got)\n%s", tc.desc, base.PendingKey(qname), diff)
			}
		}
		aggregator.shutdown()
		wg.Wait()
	}
}

55

asynq.go

@@ -8,12 +8,13 @@ import (
 	"context"
 	"crypto/tls"
 	"fmt"
+	"net"
 	"net/url"
 	"strconv"

"strings"

|