Mirror of https://github.com/hibiken/asynq.git, synced 2025-10-24 10:36:12 +08:00

Compare commits

285 Commits

| SHA1 |
|---|
| 05534c6f24 |
| f0db219f6a |
| 3ae0e7f528 |
| 421dc584ff |
| cfd1a1dfe8 |
| c197902dc0 |
| e6355bf3f5 |
| 95c90a5cb8 |
| 6817af366a |
| 4bce28d677 |
| 73f930313c |
| bff2a05d59 |
| 684a7e0c98 |
| 46b23d6495 |
| c0ae62499f |
| 7744ade362 |
| f532c95394 |
| ff6768f9bb |
| d5e9f3b1bd |
| d02b722d8a |
| 99c7ebeef2 |
| bf54621196 |
| 27baf6de0d |
| 1bd0bee1e5 |
| a9feec5967 |
| e01c6379c8 |
| a0df047f71 |
| 68dd6d9a9d |
| 6cce31a134 |
| f9d7af3def |
| b0321fb465 |
| 7776c7ae53 |
| 709ca79a2b |
| 08d8f0b37c |
| 385323b679 |
| 77604af265 |
| 4765742e8a |
| 68839dc9d3 |
| 8922d2423a |
| b358de907e |
| 8ee1825e67 |
| c8bda26bed |
| 8aeeb61c9d |
| 96c51fdc23 |
| ea9086fd8b |
| e63d51da0c |
| cd351d49b9 |
| 87264b66f3 |
| 62168b8d0d |
| 840f7245b1 |
| 12f4c7cf6e |
| 0ec3b55e6b |
| 4bcc5ab6aa |
| 456edb6b71 |
| b835090ad8 |
| 09cbea66f6 |
| b9c2572203 |
| 0bf767cf21 |
| 1812d05d21 |
| 4af65d5fa5 |
| a19ad19382 |
| 8117ce8972 |
| d98ecdebb4 |
| ffe9aa74b3 |
| d2d4029aba |
| 76bd865ebc |
| 136d1c9ea9 |
| 52e04355d3 |
| cde3e57c6c |
| dd66acef1b |
| 30a3d9641a |
| 961582cba6 |
| 430dbb298e |
| 675826be5f |
| 62f4e46b73 |
| a500f8a534 |
| bcfeff38ed |
| 12a90f6a8d |
| 807624e7dd |
| 4d65024bd7 |
| 76486b5cb4 |
| 1db516c53c |
| cb5bdf245c |
| 267493ccef |
| 5d7f1b6a80 |
| 77ded502ab |
| f2284be43d |
| 3cadab55cb |
| 298a420f9f |
| b1d717c842 |
| 56e5762eea |
| 5ec41e388b |
| 9c95c41651 |
| 476812475e |
| 7af3981929 |
| 2516c4baba |
| ebe482a65c |
| 3e9fc2f972 |
| 63ce9ed0f9 |
| 32d3f329b9 |
| 544c301a8b |
| 8b997d2fab |
| 901105a8d7 |
| aaa3f1d4fd |
| 4722ca2d3d |
| 6a9d9fd717 |
| de28c1ea19 |
| f618f5b1f5 |
| aa936466b3 |
| 5d1ec70544 |
| d1d3be9b00 |
| bc77f6fe14 |
| efe197a47b |
| 97b5516183 |
| 8eafa03ca7 |
| 430b01c9aa |
| 14c381dc40 |
| e13122723a |
| eba7c4e085 |
| bfde0b6283 |
| afde6a7266 |
| 6529a1e0b1 |
| c9a6ab8ae1 |
| 557c1a5044 |
| 0236eb9a1c |
| 3c2b2cf4a3 |
| 04df71198d |
| 2884044e75 |
| 3719fad396 |
| 42c7ac0746 |
| d331ff055d |
| ccb682853e |
| 7c3ad9e45c |
| ea23db4f6b |
| 00a25ca570 |
| 7235041128 |
| a150d18ed7 |
| 0712e90f23 |
| c5100a9c23 |
| 196d66f221 |
| 38509e309f |
| f4dd8fe962 |
| c06e9de97d |
| 52d536a8f5 |
| f9c0673116 |
| b604d25937 |
| dfdf530a24 |
| e9239260ae |
| 8f9d5a3352 |
| c4dc993241 |
| 37dfd746d4 |
| 8d6e4167ab |
| 476862dd7b |
| dcd873fa2a |
| 2604bb2192 |
| 942345ee80 |
| 1f059eeee1 |
| 4ae73abdaa |
| 96b2318300 |
| 8312515e64 |
| 50e7f38365 |
| fadcae76d6 |
| a2d4ead989 |
| 82b6828f43 |
| 3114987428 |
| 1ee3b10104 |
| 6d720d6a05 |
| 3e6981170d |
| a9aa480551 |
| 9d41de795a |
| c43fb21a0a |
| a293efcdab |
| 69d7ec725a |
| 450a9aa1e2 |
| 6e294a7013 |
| c26b7469bd |
| 818c2d6f35 |
| e09870a08a |
| ac3d5b126a |
| 29e542e591 |
| a891ce5568 |
| ebe3c4083f |
| c8c47fcbf0 |
| cca680a7fd |
| 8076b5ae50 |
| a42c174dae |
| a88325cb96 |
| eb739a0258 |
| a9c31553b8 |
| dab8295883 |
| 131ac823fd |
| 4897dba397 |
| 6b96459881 |
| 572eb338d5 |
| 27f4027447 |
| ee1afd12f5 |
| 3ac548e97c |
| f38f94b947 |
| d6f389e63f |
| 118ef27bf2 |
| fad0696828 |
| 4037b41479 |
| 96f23d88cd |
| 83bdca5220 |
| 2f226dfb84 |
| 3f26122ac0 |
| 2a18181501 |
| aa2676bb57 |
| 9348a62691 |
| f59de9ac56 |
| 996a6c0ead |
| 47e9ba4eba |
| dbf140a767 |
| 5f82b4b365 |
| 44a3d177f0 |
| 24b13bd865 |
| d25090c669 |
| b5caefd663 |
| becd26479b |
| 4b81b91d3e |
| 8e23b865e9 |
| a873d488ee |
| e0a8f1252a |
| 650d7fdbe9 |
| f6d504939e |
| 74f08795f8 |
| 35b2b1782e |
| f63dcce0c0 |
| 565f86ee4f |
| 94aa878060 |
| 50b6034bf9 |
| 154113d0d0 |
| 669c7995c4 |
| 6d6a301379 |
| 53f9475582 |
| e8fdbc5a72 |
| 5f06c308f0 |
| a913e6d73f |
| 6978e93080 |
| 92d77bbc6e |
| a28f61f313 |
| 9bd3d8e19e |
| 7382e2aeb8 |
| 007fac8055 |
| 8d43fe407a |
| 34b90ecc8a |
| 8b60e6a268 |
| 486dcd799b |
| 195f4603bb |
| 2e2c9b9f6b |
| 199bf4d66a |
| 7e942ec241 |
| 379da8f7a2 |
| feee87adda |
| 7657f560ec |
| 7c7de0d8e0 |
| 83f1e20d74 |
| 4e8ac151ae |
| 08b71672aa |
| 92af00f9fd |
| 113451ce6a |
| 9cd9f3d6b4 |
| 7b9119c703 |
| 9b05dea394 |
| 6cc5bafaba |
| 716d3d987e |
| 0527b93432 |
| 5dddc35d7c |
| 4e5f596910 |
| 8bf5917cd9 |
| 7f30fa2bb6 |
| ade6e61f51 |
| a2abeedaa0 |
| 81bb52b08c |
| bc2a7635a0 |
| f65d408bf9 |
| 4749b4bbfc |
| 06c4a1c7f8 |
| 8af4cbad51 |
| 4e800a7f68 |
| d6a5c84dc6 |
| 363cfedb49 |
| 4595bd41c3 |
| e236d55477 |
| a38f628f3b |

`.github/workflows/benchstat.yml` (82 lines changed; vendored; normal file)

`@@ -0,0 +1,82 @@` (new file)

```yaml
# This workflow runs benchmarks against the current branch,
# compares them to benchmarks against master,
# and uploads the results as an artifact.

name: benchstat

on: [pull_request]

jobs:
  incoming:
    runs-on: ubuntu-latest
    services:
      redis:
        image: redis
        ports:
          - 6379:6379
    steps:
      - name: Checkout
        uses: actions/checkout@v2
      - name: Set up Go
        uses: actions/setup-go@v2
        with:
          go-version: 1.16.x
      - name: Benchmark
        run: go test -run=^$ -bench=. -count=5 -timeout=60m ./... | tee -a new.txt
      - name: Upload Benchmark
        uses: actions/upload-artifact@v2
        with:
          name: bench-incoming
          path: new.txt

  current:
    runs-on: ubuntu-latest
    services:
      redis:
        image: redis
        ports:
          - 6379:6379
    steps:
      - name: Checkout
        uses: actions/checkout@v2
        with:
          ref: master
      - name: Set up Go
        uses: actions/setup-go@v2
        with:
          go-version: 1.15.x
      - name: Benchmark
        run: go test -run=^$ -bench=. -count=5 -timeout=60m ./... | tee -a old.txt
      - name: Upload Benchmark
        uses: actions/upload-artifact@v2
        with:
          name: bench-current
          path: old.txt

  benchstat:
    needs: [incoming, current]
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v2
      - name: Set up Go
        uses: actions/setup-go@v2
        with:
          go-version: 1.15.x
      - name: Install benchstat
        run: go get -u golang.org/x/perf/cmd/benchstat
      - name: Download Incoming
        uses: actions/download-artifact@v2
        with:
          name: bench-incoming
      - name: Download Current
        uses: actions/download-artifact@v2
        with:
          name: bench-current
      - name: Benchstat Results
        run: benchstat old.txt new.txt | tee -a benchstat.txt
      - name: Upload benchstat results
        uses: actions/upload-artifact@v2
        with:
          name: benchstat
          path: benchstat.txt
```
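The benchstat workflow compares `go test -bench` output from the pull-request branch against `master`. Below is a minimal sketch of the kind of function `-bench=.` would pick up. `taskMessage` and `encodeTaskMessage` are hypothetical stand-ins, not asynq APIs. In a repository the function would live in a `_test.go` file as `func BenchmarkEncode(b *testing.B)`. Here, `testing.Benchmark` runs it from `main` so the snippet is self-contained.

```go
package main

import (
	"encoding/json"
	"fmt"
	"testing"
)

// taskMessage is a hypothetical payload type used only for this benchmark.
type taskMessage struct {
	Type    string `json:"type"`
	Payload []byte `json:"payload"`
	Queue   string `json:"queue"`
}

// encodeTaskMessage is a hypothetical function under benchmark.
func encodeTaskMessage(m *taskMessage) ([]byte, error) {
	return json.Marshal(m)
}

// benchmarkEncode has the same shape as a BenchmarkXxx function in a _test.go file.
func benchmarkEncode(b *testing.B) {
	msg := &taskMessage{Type: "email:welcome", Payload: []byte(`{"user_id":42}`), Queue: "default"}
	b.ReportAllocs() // report allocs/op, which benchstat also compares
	b.ResetTimer()   // exclude setup from the measurement
	for i := 0; i < b.N; i++ {
		if _, err := encodeTaskMessage(msg); err != nil {
			b.Fatal(err)
		}
	}
}

func main() {
	// testing.Benchmark grows b.N until the measurement is stable, like `go test -bench`.
	res := testing.Benchmark(benchmarkEncode)
	fmt.Println(res.String(), res.MemString())
}
```

As written, the `incoming` job uses Go 1.16.x and the `current` job uses Go 1.15.x. The reported deltas may therefore partly reflect the toolchain rather than the code change.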

`.github/workflows/build.yml` (35 lines changed; vendored; normal file)

`@@ -0,0 +1,35 @@` (new file)

```yaml
name: build

on: [push, pull_request]

jobs:
  build:
    strategy:
      matrix:
        os: [ubuntu-latest]
        go-version: [1.13.x, 1.14.x, 1.15.x, 1.16.x]
    runs-on: ${{ matrix.os }}
    services:
      redis:
        image: redis
        ports:
          - 6379:6379
    steps:
      - uses: actions/checkout@v2

      - name: Set up Go
        uses: actions/setup-go@v2
        with:
          go-version: ${{ matrix.go-version }}

      - name: Build
        run: go build -v ./...

      - name: Test
        run: go test -race -v -coverprofile=coverage.txt -covermode=atomic ./...

      - name: Benchmark Test
        run: go test -run=^$ -bench=. -loglevel=debug ./...

      - name: Upload coverage to Codecov
        uses: codecov/codecov-action@v1
```

`.gitignore` (3 lines changed; vendored)

```diff
@@ -19,3 +19,6 @@
 
 # Ignore asynq config file
 .asynq.*
+
+# Ignore editor config files
+.vscode
```

`.travis.yml` (12 lines changed; file deleted)

```diff
@@ -1,12 +0,0 @@
-language: go
-go_import_path: github.com/hibiken/asynq
-git:
-  depth: 1
-go: [1.13.x, 1.14.x]
-script:
-  - go test -race -v -coverprofile=coverage.txt -covermode=atomic ./...
-services:
-  - redis-server
-after_success:
-  - bash ./.travis/benchcmp.sh
-  - bash <(curl -s https://codecov.io/bash)
```

```diff
@@ -1,15 +0,0 @@
-if [ "${TRAVIS_PULL_REQUEST_BRANCH:-$TRAVIS_BRANCH}" != "master" ]; then
-    REMOTE_URL="$(git config --get remote.origin.url)";
-    cd ${TRAVIS_BUILD_DIR}/.. && \
-    git clone ${REMOTE_URL} "${TRAVIS_REPO_SLUG}-bench" && \
-    cd "${TRAVIS_REPO_SLUG}-bench" && \
-    # Benchmark master
-    git checkout master && \
-    go test -run=XXX -bench=. ./... > master.txt && \
-    # Benchmark feature branch
-    git checkout ${TRAVIS_COMMIT} && \
-    go test -run=XXX -bench=. ./... > feature.txt && \
-    go get -u golang.org/x/tools/cmd/benchcmp && \
-    # compare two benchmarks
-    benchcmp master.txt feature.txt;
-fi
```

`CHANGELOG.md` (263 lines changed)

```diff
@@ -7,6 +7,269 @@ and this project adheres to [Semantic Versioning](https://semver.org/spec/v2.0.0
 
 ## [Unreleased]
 
+## [0.18.5] - 2021-09-01
+
+### Added
+
+- `IsFailure` config option is added to determine whether an error returned from a Handler counts as a failure.
+
```

```diff
+## [0.18.4] - 2021-08-17
+
+### Fixed
+
+- Scheduler methods are now thread-safe. It's now safe to call `Register` and `Unregister` concurrently.
+
+## [0.18.3] - 2021-08-09
+
+### Changed
+
+- `Client.Enqueue` no longer enqueues tasks with an empty typename; an error message is returned instead.
+
+## [0.18.2] - 2021-07-15
+
+### Changed
+
+- Changed `Queue` function to not convert the provided queue name to lowercase. Queue names are now case-sensitive.
+- `QueueInfo.MemoryUsage` is now an approximate usage value.
+
+### Fixed
+
+- Fixed latency issue around memory usage (see https://github.com/hibiken/asynq/issues/309).
+
+## [0.18.1] - 2021-07-04
+
+### Changed
+
+- Changed to execute task recovering logic when server starts up; previously it needed to wait for a minute for the task recovering logic to execute.
+
+### Fixed
+
+- Fixed task recovering logic to execute every minute
+
+## [0.18.0] - 2021-06-29
+
+### Changed
+
+- `NewTask` function now takes an array of bytes as payload.
+- Task `Type` and `Payload` should be accessed by a method call.
+- `Server` API has changed. Renamed `Quiet` to `Stop`. Renamed `Stop` to `Shutdown`. _Note:_ As a result of this renaming, the behavior of `Stop` has changed. Please update the existing code to call `Shutdown` where it used to call `Stop`.
+- `Scheduler` API has changed. Renamed `Stop` to `Shutdown`.
+- Requires Redis v4.0+ for multiple field/value pair support
+- `Client.Enqueue` now returns `TaskInfo`
+- `Inspector.RunTaskByKey` is replaced with `Inspector.RunTask`
+- `Inspector.DeleteTaskByKey` is replaced with `Inspector.DeleteTask`
+- `Inspector.ArchiveTaskByKey` is replaced with `Inspector.ArchiveTask`
+- `inspeq` package is removed. All types and functions from the package are moved to `asynq` package.
+- `WorkerInfo` field names have changed.
+- `Inspector.CancelActiveTask` is renamed to `Inspector.CancelProcessing`
+
```
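Since 0.18.0, `NewTask` takes the payload as raw bytes, so the caller picks the encoding. This is a minimal sketch using JSON. `emailPayload`, `newEmailTaskPayload`, `decodeEmailPayload` and the `"email:welcome"` type name are hypothetical. The asynq call appears only in a comment so the snippet runs without the library.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// emailPayload is a hypothetical payload schema shared by producer and handler.
type emailPayload struct {
	UserID   int    `json:"user_id"`
	Template string `json:"template"`
}

// newEmailTaskPayload encodes the payload; the bytes are what would be passed
// as the second argument of asynq.NewTask(typename, payload).
func newEmailTaskPayload(userID int, tmpl string) ([]byte, error) {
	return json.Marshal(emailPayload{UserID: userID, Template: tmpl})
}

// decodeEmailPayload is what a handler would do with the bytes from t.Payload().
func decodeEmailPayload(b []byte) (emailPayload, error) {
	var p emailPayload
	err := json.Unmarshal(b, &p)
	return p, err
}

func main() {
	b, err := newEmailTaskPayload(42, "welcome")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(b)) // {"user_id":42,"template":"welcome"}
	// task := asynq.NewTask("email:welcome", b)

	p, err := decodeEmailPayload(b)
	if err != nil {
		panic(err)
	}
	fmt.Println(p.UserID, p.Template) // 42 welcome
}
```

JSON is only one option. Because the payload is opaque bytes, protobuf or any other encoding works, as long as producer and handler agree.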

```diff
+## [0.17.2] - 2021-06-06
+
+### Fixed
+
+- Free unique lock when task is deleted (https://github.com/hibiken/asynq/issues/275).
+
+## [0.17.1] - 2021-04-04
+
+### Fixed
+
+- Fix bug in internal `RDB.memoryUsage` method.
+
+## [0.17.0] - 2021-03-24
+
+### Added
+
+- `DialTimeout`, `ReadTimeout`, and `WriteTimeout` options are added to `RedisConnOpt`.
+
+## [0.16.1] - 2021-03-20
+
+### Fixed
+
+- Replace `KEYS` command with `SCAN` as recommended by [redis doc](https://redis.io/commands/KEYS).
+
+## [0.16.0] - 2021-03-10
+
+### Added
+
+- `Unregister` method is added to `Scheduler` to remove a registered entry.
+
+## [0.15.0] - 2021-01-31
+
+**IMPORTANT**: All `Inspector` related code is moved to subpackage "github.com/hibiken/asynq/inspeq"
+
+### Changed
+
+- `Inspector` related code is moved to subpackage "github.com/hibiken/asynq/inspeq".
+- `RedisConnOpt` interface has changed slightly. If you have been passing `RedisClientOpt`, `RedisFailoverClientOpt`, or `RedisClusterClientOpt` as a pointer,
+  update your code to pass it as a value.
+- `ErrorMsg` field in `RetryTask` and `ArchivedTask` was renamed to `LastError`.
+
+### Added
+
+- `MaxRetry`, `Retried`, `LastError` fields were added to all task types returned from `Inspector`.
+- `MemoryUsage` field was added to `QueueStats`.
+- `DeleteAllPendingTasks`, `ArchiveAllPendingTasks` were added to `Inspector`
+- `DeleteTaskByKey` and `ArchiveTaskByKey` now support deleting/archiving `PendingTask`.
+- asynq CLI now supports deleting/archiving pending tasks.
+
+## [0.14.1] - 2021-01-19
+
+### Fixed
+
+- `go.mod` file for CLI
+
+## [0.14.0] - 2021-01-14
+
+**IMPORTANT**: Please run `asynq migrate` command to migrate from the previous versions.
+
+### Changed
+
+- Renamed `DeadTask` to `ArchivedTask`.
+- Renamed the operation `Kill` to `Archive` in `Inspector`.
+- Print stack trace when Handler panics.
+- Include a file name and a line number in the error message when recovering from a panic.
+
+### Added
+
+- `DefaultRetryDelayFunc` is now a public API, which can be used in the custom `RetryDelayFunc`.
+- `SkipRetry` error is added to be used as a return value from `Handler`.
+- `Servers` method is added to `Inspector`
+- `CancelActiveTask` method is added to `Inspector`.
+- `ListSchedulerEnqueueEvents` method is added to `Inspector`.
+- `SchedulerEntries` method is added to `Inspector`.
+- `DeleteQueue` method is added to `Inspector`.
+
```

```diff
+## [0.13.1] - 2020-11-22
+
+### Fixed
+
+- Fixed processor to wait for the specified time duration before forcefully shutting down workers.
+
+## [0.13.0] - 2020-10-13
+
+### Added
+
+- `Scheduler` type is added to enable periodic tasks. See the godoc for its APIs and [wiki](https://github.com/hibiken/asynq/wiki/Periodic-Tasks) for the getting-started guide.
+
+### Changed
+
+- interface `Option` has changed. See the godoc for the new interface.
+  This change would have no impact as long as you are using exported functions (e.g. `MaxRetry`, `Queue`, etc)
+  to create `Option`s.
+
+### Added
+
+- `Payload.String() string` method is added
+- `Payload.MarshalJSON() ([]byte, error)` method is added
+
+## [0.12.0] - 2020-09-12
+
+**IMPORTANT**: If you are upgrading from a previous version, please install the latest version of the CLI `go get -u github.com/hibiken/asynq/tools/asynq` and run `asynq migrate` command. No process should be writing to Redis while you run the migration command.
+
+## The semantics of queue have changed
+
+Previously, we called tasks that are ready to be processed _"Enqueued tasks"_, and other tasks that are scheduled to be processed in the future _"Scheduled tasks"_, etc.
+We changed the semantics of _"Enqueue"_ slightly; all tasks that the client pushes to Redis are _Enqueued_ to a queue. Within a queue, tasks will transition from one state to another.
+Possible task states are:
+
+- `Pending`: task is ready to be processed (previously called "Enqueued")
+- `Active`: task is currently being processed (previously called "InProgress")
+- `Scheduled`: task is scheduled to be processed in the future
+- `Retry`: task failed to be processed and will be retried again in the future
+- `Dead`: task has exhausted all of its retries and is stored for manual inspection
+
+**This semantics change is reflected in the new `Inspector` API and CLI commands.**
+
```
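The five task states introduced in 0.12.0 can be modeled as a small enum. This sketch is illustrative only. `TaskState`, its constants and `canTransition` are hypothetical names. The transition table is an assumption read off the state descriptions: a failing `Active` task moves to `Retry`, or to `Dead` once retries are exhausted, and due `Scheduled`/`Retry` tasks become `Pending`. It is not asynq's internal implementation. A task that succeeds simply leaves the queue, so this list has no "completed" state.

```go
package main

import "fmt"

// TaskState is a hypothetical enum mirroring the states listed in the changelog.
type TaskState int

const (
	Pending   TaskState = iota // ready to be processed
	Active                     // currently being processed
	Scheduled                  // to be processed in the future
	Retry                      // failed; will be retried in the future
	Dead                       // retries exhausted; kept for manual inspection
)

func (s TaskState) String() string {
	switch s {
	case Pending:
		return "pending"
	case Active:
		return "active"
	case Scheduled:
		return "scheduled"
	case Retry:
		return "retry"
	case Dead:
		return "dead"
	}
	return fmt.Sprintf("TaskState(%d)", int(s))
}

// transitions lists the automatic moves assumed from the descriptions above.
var transitions = map[TaskState][]TaskState{
	Pending:   {Active},
	Active:    {Retry, Dead},
	Scheduled: {Pending},
	Retry:     {Pending},
}

// canTransition reports whether from -> to is one of the assumed automatic moves.
func canTransition(from, to TaskState) bool {
	for _, s := range transitions[from] {
		if s == to {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(Scheduled, "->", Pending, canTransition(Scheduled, Pending)) // scheduled -> pending true
	fmt.Println(Dead, "->", Active, canTransition(Dead, Active))             // dead -> active false
}
```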

```diff
+---
+
+### Changed
+
+#### `Client`
+
+Use `ProcessIn` or `ProcessAt` option to schedule a task instead of `EnqueueIn` or `EnqueueAt`.
+
+| Previously                  | v0.12.0                                    |
+| --------------------------- | ------------------------------------------ |
+| `client.EnqueueAt(t, task)` | `client.Enqueue(task, asynq.ProcessAt(t))` |
+| `client.EnqueueIn(d, task)` | `client.Enqueue(task, asynq.ProcessIn(d))` |
+
```

```diff
+#### `Inspector`
+
+All Inspector methods are scoped to a queue, and the methods take `qname (string)` as the first argument.
+`EnqueuedTask` is renamed to `PendingTask` and its corresponding methods.
+`InProgressTask` is renamed to `ActiveTask` and its corresponding methods.
+Command "Enqueue" is replaced by the verb "Run" (e.g. `EnqueueAllScheduledTasks` --> `RunAllScheduledTasks`)
+
+#### `CLI`
+
+CLI commands are restructured to use subcommands. Commands are organized into a few management commands:
+To view details on any command, use `asynq help <command> <subcommand>`.
+
+- `asynq stats`
+- `asynq queue [ls inspect history rm pause unpause]`
+- `asynq task [ls cancel delete kill run delete-all kill-all run-all]`
+- `asynq server [ls]`
+
+### Added
+
+#### `RedisConnOpt`
+
+- `RedisClusterClientOpt` is added to connect to Redis Cluster.
+- `Username` field is added to all `RedisConnOpt` types in order to authenticate connection when Redis ACLs are used.
+
+#### `Client`
+
+- `ProcessIn(d time.Duration) Option` and `ProcessAt(t time.Time) Option` are added to replace `EnqueueIn` and `EnqueueAt` functionality.
+
+#### `Inspector`
+
+- `Queues() ([]string, error)` method is added to get all queue names.
+- `ClusterKeySlot(qname string) (int64, error)` method is added to get queue's hash slot in Redis cluster.
+- `ClusterNodes(qname string) ([]ClusterNode, error)` method is added to get a list of Redis cluster nodes for the given queue.
+- `Close() error` method is added to close connection with Redis.
+
+#### `Handler`
+
+- `GetQueueName(ctx context.Context) (string, bool)` helper is added to extract queue name from a context.
+
+## [0.11.0] - 2020-07-28
+
+### Added
+
+- `Inspector` type was added to monitor and mutate state of queues and tasks.
+- `HealthCheckFunc` and `HealthCheckInterval` fields were added to `Config` to allow users to specify a callback
+  function to check for broker connection.
+
+## [0.10.0] - 2020-07-06
+
+### Changed
+
+- All tasks now require a timeout or deadline. By default, timeout is set to 30 mins.
+- Tasks that exceed their deadline are automatically retried.
+- Encoding schema for task message has changed. Please install the latest CLI and run `migrate` command if
+  you have tasks enqueued with the previous version of asynq.
+- API of `(*Client).Enqueue`, `(*Client).EnqueueIn`, and `(*Client).EnqueueAt` has changed to return a `*Result`.
+- API of `ErrorHandler` has changed. It now takes context as the first argument and removed `retried`, `maxRetry` from the argument list.
+  Use `GetRetryCount` and/or `GetMaxRetry` to get the count values.
+
```

```diff
+## [0.9.4] - 2020-06-13
+
+### Fixed
+
+- Fixes the issue of the same task being processed by more than one worker (https://github.com/hibiken/asynq/issues/90).
+
+## [0.9.3] - 2020-06-12
+
+### Fixed
+
+- Fixes the JSON number overflow issue (https://github.com/hibiken/asynq/issues/166).
+
+## [0.9.2] - 2020-06-08
+
+### Added
+
+- The `pause` and `unpause` commands were added to the CLI. See README for the CLI for details.
+
 ## [0.9.1] - 2020-05-29
 
 ### Added
```

```diff
@@ -45,6 +45,7 @@ Thank you! We'll try to respond as quickly as possible.
 6. Create a new pull request
 
 Please try to keep your pull request focused in scope and avoid including unrelated commits.
+Please run tests against a Redis cluster locally with the `--redis_cluster` flag to ensure that the code works with Redis Cluster. TODO: Run tests using Redis cluster on CI.
 
 After you have submitted your pull request, we'll try to get back to you as soon as possible. We may suggest some changes or improvements.
 
```
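The contributing note asks for tests to be run with a `--redis_cluster` flag. This is a minimal sketch of how a Go test binary can declare such a switch with the standard `flag` package. The FlagSet name and `parseTestFlags` are hypothetical, and the snippet does not reproduce asynq's actual test setup. In a real `_test.go` file, the flag would normally be declared at package level, and `go test` would parse it.

```go
package main

import (
	"flag"
	"fmt"
)

// parseTestFlags declares a --redis_cluster switch on its own FlagSet so the
// idea can be exercised outside `go test`. The FlagSet name is hypothetical.
func parseTestFlags(args []string) (bool, error) {
	fs := flag.NewFlagSet("broker-tests", flag.ContinueOnError)
	cluster := fs.Bool("redis_cluster", false, "use Redis Cluster as the broker in tests")
	if err := fs.Parse(args); err != nil {
		return false, err
	}
	return *cluster, nil
}

func main() {
	// The flag package treats -redis_cluster and --redis_cluster the same way.
	on, err := parseTestFlags([]string{"--redis_cluster"})
	if err != nil {
		panic(err)
	}
	fmt.Println(on) // true
}
```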

`Makefile` (7 lines changed; normal file)

`@@ -0,0 +1,7 @@` (new file)

```makefile
ROOT_DIR:=$(shell dirname $(realpath $(firstword $(MAKEFILE_LIST))))

proto: internal/proto/asynq.proto
	protoc -I=$(ROOT_DIR)/internal/proto \
		--go_out=$(ROOT_DIR)/internal/proto \
		--go_opt=module=github.com/hibiken/asynq/internal/proto \
		$(ROOT_DIR)/internal/proto/asynq.proto
```

247

README.md

247

README.md

@@ -1,56 +1,64 @@

|

|||||||

# Asynq

|

<img src="https://user-images.githubusercontent.com/11155743/114697792-ffbfa580-9d26-11eb-8e5b-33bef69476dc.png" alt="Asynq logo" width="360px" />

|

||||||

|

|

||||||

|

# Simple, reliable & efficient distributed task queue in Go

|

||||||

|

|

||||||

[](https://travis-ci.com/hibiken/asynq)

|

|

||||||

[](https://opensource.org/licenses/MIT)

|

|

||||||

[](https://goreportcard.com/report/github.com/hibiken/asynq)

|

|

||||||

[](https://godoc.org/github.com/hibiken/asynq)

|

[](https://godoc.org/github.com/hibiken/asynq)

|

||||||

|

[](https://goreportcard.com/report/github.com/hibiken/asynq)

|

||||||

|

|

||||||

|

[](https://opensource.org/licenses/MIT)

|

||||||

[](https://gitter.im/go-asynq/community)

|

[](https://gitter.im/go-asynq/community)

|

||||||

[](https://codecov.io/gh/hibiken/asynq)

|

|

||||||

|

|

||||||

## Overview

|

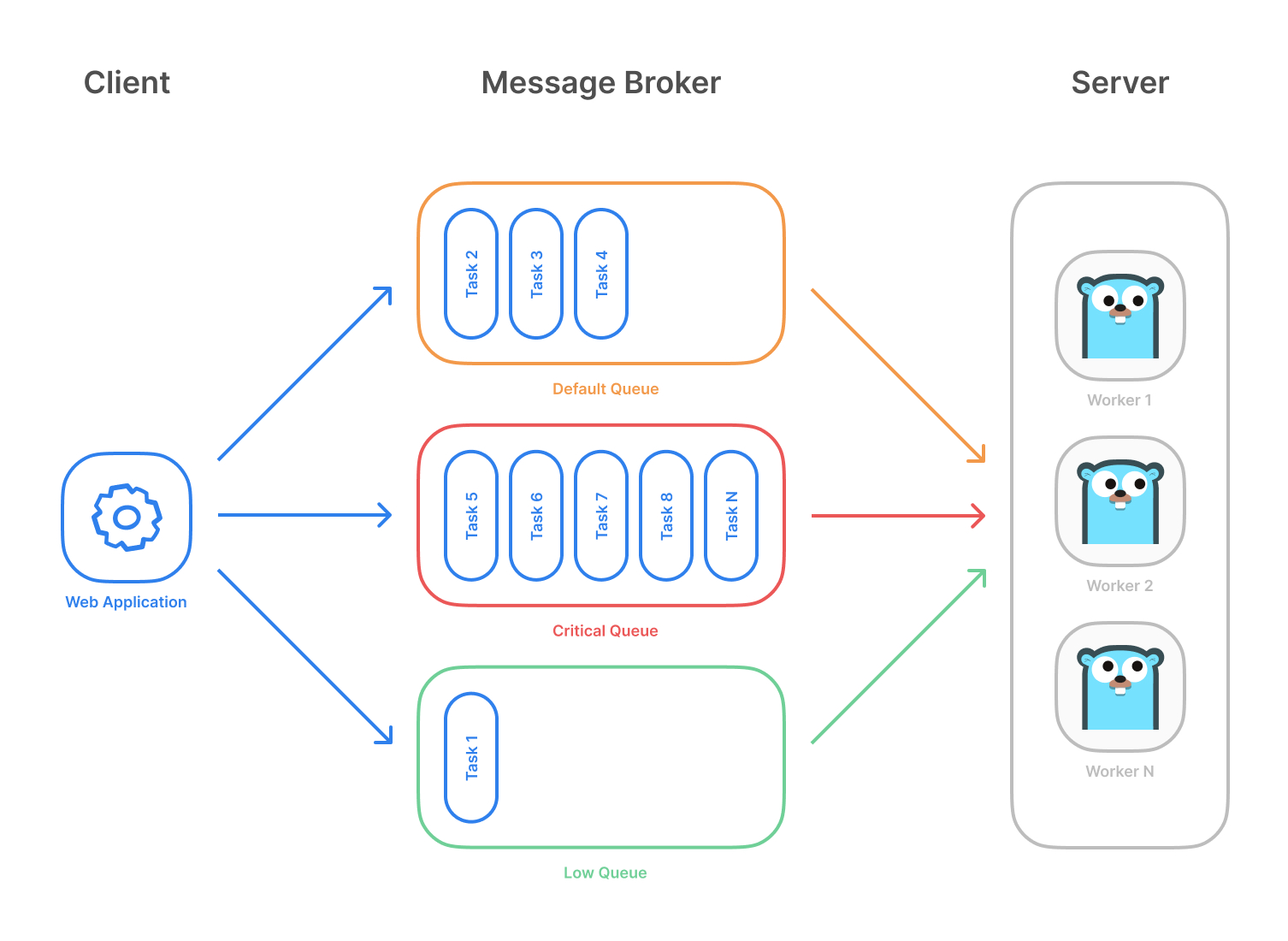

Asynq is a Go library for queueing tasks and processing them asynchronously with workers. It's backed by [Redis](https://redis.io/) and is designed to be scalable yet easy to get started.

|

||||||

|

|

||||||

Asynq is a Go library for queueing tasks and processing them in the background with workers. It is backed by Redis and it is designed to have a low barrier to entry. It should be integrated in your web stack easily.

|

|

||||||

|

|

||||||

Highlevel overview of how Asynq works:

|

Highlevel overview of how Asynq works:

|

||||||

|

|

||||||

- Client puts task on a queue

|

- Client puts tasks on a queue

|

||||||

- Server pulls task off queues and starts a worker goroutine for each task

|

- Server pulls tasks off queues and starts a worker goroutine for each task

|

||||||

- Tasks are processed concurrently by multiple workers

|

- Tasks are processed concurrently by multiple workers

|

||||||

|

|

||||||

Task queues are used as a mechanism to distribute work across multiple machines.

|

Task queues are used as a mechanism to distribute work across multiple machines. A system can consist of multiple worker servers and brokers, giving way to high availability and horizontal scaling.

|

||||||

A system can consist of multiple worker servers and brokers, giving way to high availability and horizontal scaling.

|

|

||||||

|

|

||||||

|

**Example use case**

|

||||||

|

|

||||||

## Stability and Compatibility

|

|

||||||

|

|

||||||

**Important Note**: Current major version is zero (v0.x.x) to accomodate rapid development and fast iteration while getting early feedback from users (Feedback on APIs are appreciated!). The public API could change without a major version update before v1.0.0 release.

|

|

||||||

|

|

||||||

**Status**: The library is currently undergoing heavy development with frequent, breaking API changes.

|

|

||||||

|

|

||||||

## Features

|

## Features

|

||||||

|

|

||||||

- Guaranteed [at least one execution](https://www.cloudcomputingpatterns.org/at_least_once_delivery/) of a task

|

- Guaranteed [at least one execution](https://www.cloudcomputingpatterns.org/at_least_once_delivery/) of a task

|

||||||

- Scheduling of tasks

|

- Scheduling of tasks

|

||||||

- Durability since tasks are written to Redis

|

|

||||||

- [Retries](https://github.com/hibiken/asynq/wiki/Task-Retry) of failed tasks

|

- [Retries](https://github.com/hibiken/asynq/wiki/Task-Retry) of failed tasks

|

||||||

- [Weighted priority queues](https://github.com/hibiken/asynq/wiki/Priority-Queues#weighted-priority-queues)

|

- Automatic recovery of tasks in the event of a worker crash

|

||||||

- [Strict priority queues](https://github.com/hibiken/asynq/wiki/Priority-Queues#strict-priority-queues)

|

- [Weighted priority queues](https://github.com/hibiken/asynq/wiki/Queue-Priority#weighted-priority)

|

||||||

|

- [Strict priority queues](https://github.com/hibiken/asynq/wiki/Queue-Priority#strict-priority)

|

||||||

- Low latency to add a task since writes are fast in Redis

|

- Low latency to add a task since writes are fast in Redis

|

||||||

- De-duplication of tasks using [unique option](https://github.com/hibiken/asynq/wiki/Unique-Tasks)

|

- De-duplication of tasks using [unique option](https://github.com/hibiken/asynq/wiki/Unique-Tasks)

|

||||||

- Allow [timeout and deadline per task](https://github.com/hibiken/asynq/wiki/Task-Timeout-and-Cancelation)

|

- Allow [timeout and deadline per task](https://github.com/hibiken/asynq/wiki/Task-Timeout-and-Cancelation)

|

||||||

- [Flexible handler interface with support for middlewares](https://github.com/hibiken/asynq/wiki/Handler-Deep-Dive)

|

- [Flexible handler interface with support for middlewares](https://github.com/hibiken/asynq/wiki/Handler-Deep-Dive)

|

||||||

- [Support Redis Sentinels](https://github.com/hibiken/asynq/wiki/Automatic-Failover) for HA

|

- [Ability to pause queue](/tools/asynq/README.md#pause) to stop processing tasks from the queue

|

||||||

|

- [Periodic Tasks](https://github.com/hibiken/asynq/wiki/Periodic-Tasks)

|

||||||

|

- [Support Redis Cluster](https://github.com/hibiken/asynq/wiki/Redis-Cluster) for automatic sharding and high availability

|

||||||

|

- [Support Redis Sentinels](https://github.com/hibiken/asynq/wiki/Automatic-Failover) for high availability

|

||||||

|

- [Web UI](#web-ui) to inspect and remote-control queues and tasks

|

||||||

- [CLI](#command-line-tool) to inspect and remote-control queues and tasks

|

- [CLI](#command-line-tool) to inspect and remote-control queues and tasks

|

||||||

|

|

||||||

|

## Stability and Compatibility

|

||||||

|

|

||||||

|

**Status**: The library is currently undergoing **heavy development** with frequent, breaking API changes.

|

||||||

|

|

||||||

|

> ☝️ **Important Note**: Current major version is zero (`v0.x.x`) to accomodate rapid development and fast iteration while getting early feedback from users (_feedback on APIs are appreciated!_). The public API could change without a major version update before `v1.0.0` release.

|

||||||

|

|

||||||

## Quickstart

|

## Quickstart

|

||||||

|

|

||||||

First, make sure you are running a Redis server locally.

|

Make sure you have Go installed ([download](https://golang.org/dl/)). Version `1.13` or higher is required.

|

||||||

|

|

||||||

|

Initialize your project by creating a folder and then running `go mod init github.com/your/repo` ([learn more](https://blog.golang.org/using-go-modules)) inside the folder. Then install Asynq library with the [`go get`](https://golang.org/cmd/go/#hdr-Add_dependencies_to_current_module_and_install_them) command:

|

||||||

|

|

||||||

```sh

|

```sh

|

||||||

$ redis-server

|

go get -u github.com/hibiken/asynq

|

||||||

```

|

```

|

||||||

|

|

||||||

|

Make sure you're running a Redis server locally or from a [Docker](https://hub.docker.com/_/redis) container. Version `4.0` or higher is required.

|

||||||

|

|

||||||

Next, write a package that encapsulates task creation and task handling.

|

Next, write a package that encapsulates task creation and task handling.

|

||||||

|

|

||||||

```go

|

```go

|

||||||

````diff
@@ -64,23 +72,38 @@ import (

 // A list of task types.
 const (
-	EmailDelivery = "email:deliver"
-	ImageProcessing = "image:process"
+	TypeEmailDelivery = "email:deliver"
+	TypeImageResize = "image:resize"
 )

+type EmailDeliveryPayload struct {
+	UserID int
+	TemplateID string
+}
+
+type ImageResizePayload struct {
+	SourceURL string
+}
+
 //----------------------------------------------
 // Write a function NewXXXTask to create a task.
 // A task consists of a type and a payload.
 //----------------------------------------------

-func NewEmailDeliveryTask(userID int, tmplID string) *asynq.Task {
-	payload := map[string]interface{}{"user_id": userID, "template_id": tmplID}
-	return asynq.NewTask(EmailDelivery, payload)
+func NewEmailDeliveryTask(userID int, tmplID string) (*asynq.Task, error) {
+	payload, err := json.Marshal(EmailDeliveryPayload{UserID: userID, TemplateID: tmplID})
+	if err != nil {
+		return nil, err
+	}
+	return asynq.NewTask(TypeEmailDelivery, payload), nil
 }

-func NewImageProcessingTask(src, dst string) *asynq.Task {
-	payload := map[string]interface{}{"src": src, "dst": dst}
-	return asynq.NewTask(ImageProcessing, payload)
+func NewImageResizeTask(src string) (*asynq.Task, error) {
+	payload, err := json.Marshal(ImageResizePayload{SourceURL: src})
+	if err != nil {
+		return nil, err
+	}
+	return asynq.NewTask(TypeImageResize, payload), nil
 }

 //---------------------------------------------------------------
````
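The hunk above switches task payloads from `map[string]interface{}` to JSON-encoded structs stored as `[]byte`. Below is a minimal, stdlib-only sketch of that round trip. The `task` type and the `newEmailDeliveryTask`/`decodeEmailDelivery` helpers are hypothetical stand-ins for `asynq.Task` and the README's functions, not the library's code.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// EmailDeliveryPayload mirrors the payload struct added in the README diff.
type EmailDeliveryPayload struct {
	UserID     int
	TemplateID string
}

// task is a hypothetical stand-in for asynq.Task: a type name plus opaque payload bytes.
type task struct {
	typename string
	payload  []byte
}

// newEmailDeliveryTask encodes the payload struct as JSON, like the new NewEmailDeliveryTask.
func newEmailDeliveryTask(userID int, tmplID string) (*task, error) {
	payload, err := json.Marshal(EmailDeliveryPayload{UserID: userID, TemplateID: tmplID})
	if err != nil {
		return nil, err
	}
	return &task{typename: "email:deliver", payload: payload}, nil
}

// decodeEmailDelivery is the handler-side half: it unmarshals the bytes back into the struct.
func decodeEmailDelivery(t *task) (EmailDeliveryPayload, error) {
	var p EmailDeliveryPayload
	if err := json.Unmarshal(t.payload, &p); err != nil {
		return EmailDeliveryPayload{}, fmt.Errorf("json.Unmarshal failed: %w", err)
	}
	return p, nil
}

func main() {
	t, err := newEmailDeliveryTask(42, "some:template:id")
	if err != nil {
		panic(err)
	}
	fmt.Println(t.typename, string(t.payload))

	p, err := decodeEmailDelivery(t)
	if err != nil {
		panic(err)
	}
	fmt.Println(p.UserID, p.TemplateID)
}
```

`EmailDeliveryPayload` has no struct tags, so `encoding/json` uses the Go field names as JSON keys. The encoded payload is therefore `{"UserID":42,"TemplateID":"some:template:id"}`.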

````diff
@@ -92,51 +115,42 @@ func NewImageProcessingTask(src, dst string) *asynq.Task {
 //---------------------------------------------------------------

 func HandleEmailDeliveryTask(ctx context.Context, t *asynq.Task) error {
-	userID, err := t.Payload.GetInt("user_id")
-	if err != nil {
-		return err
+	var p EmailDeliveryPayload
+	if err := json.Unmarshal(t.Payload(), &p); err != nil {
+		return fmt.Errorf("json.Unmarshal failed: %v: %w", err, asynq.SkipRetry)
 	}
-	tmplID, err := t.Payload.GetString("template_id")
-	if err != nil {
-		return err
-	}
-	fmt.Printf("Send Email to User: user_id = %d, template_id = %s\n", userID, tmplID)
-	// Email delivery logic ...
+	log.Printf("Sending Email to User: user_id=%d, template_id=%s", p.UserID, p.TemplateID)
+	// Email delivery code ...
 	return nil
 }

 // ImageProcessor implements asynq.Handler interface.
-type ImageProcesser struct {
+type ImageProcessor struct {
 	// ... fields for struct
 }

-func (p *ImageProcessor) ProcessTask(ctx context.Context, t *asynq.Task) error {
-	src, err := t.Payload.GetString("src")
-	if err != nil {
-		return err
+func (processor *ImageProcessor) ProcessTask(ctx context.Context, t *asynq.Task) error {
+	var p ImageResizePayload
+	if err := json.Unmarshal(t.Payload(), &p); err != nil {
+		return fmt.Errorf("json.Unmarshal failed: %v: %w", err, asynq.SkipRetry)
 	}
-	dst, err := t.Payload.GetString("dst")
-	if err != nil {
-		return err
-	}
-	fmt.Printf("Process image: src = %s, dst = %s\n", src, dst)
-	// Image processing logic ...
+	log.Printf("Resizing image: src=%s", p.SourceURL)
+	// Image resizing code ...
 	return nil
 }

 func NewImageProcessor() *ImageProcessor {
-	// ... return an instance
+	return &ImageProcessor{}
 }
 ```

-In your web application code, import the above package and use [`Client`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#Client) to put tasks on the queue.
-A task will be processed asynchronously by a background worker as soon as the task gets enqueued.
-Scheduled tasks will be stored in Redis and will be enqueued at the specified time.
+In your application code, import the above package and use [`Client`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#Client) to put tasks on queues.

 ```go
 package main

 import (
+	"log"
 	"time"

 	"github.com/hibiken/asynq"
````

````diff
@@ -146,64 +160,70 @@ import (
 const redisAddr = "127.0.0.1:6379"

 func main() {
-	r := asynq.RedisClientOpt{Addr: redisAddr}
-	c := asynq.NewClient(r)
-	defer c.Close()
+	client := asynq.NewClient(asynq.RedisClientOpt{Addr: redisAddr})
+	defer client.Close()

 	// ------------------------------------------------------
 	// Example 1: Enqueue task to be processed immediately.
 	// Use (*Client).Enqueue method.
 	// ------------------------------------------------------

-	t := tasks.NewEmailDeliveryTask(42, "some:template:id")
-	err := c.Enqueue(t)
+	task, err := tasks.NewEmailDeliveryTask(42, "some:template:id")
 	if err != nil {
-		log.Fatal("could not enqueue task: %v", err)
+		log.Fatalf("could not create task: %v", err)
 	}
+	info, err := client.Enqueue(task)
+	if err != nil {
+		log.Fatalf("could not enqueue task: %v", err)
+	}
+	log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)


 	// ------------------------------------------------------------
 	// Example 2: Schedule task to be processed in the future.
-	// Use (*Client).EnqueueIn or (*Client).EnqueueAt.
+	// Use ProcessIn or ProcessAt option.
 	// ------------------------------------------------------------

-	t = tasks.NewEmailDeliveryTask(42, "other:template:id")
-	err = c.EnqueueIn(24*time.Hour, t)
+	info, err = client.Enqueue(task, asynq.ProcessIn(24*time.Hour))
 	if err != nil {
-		log.Fatal("could not schedule task: %v", err)
+		log.Fatalf("could not schedule task: %v", err)
 	}
+	log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)


 	// ----------------------------------------------------------------------------
-	// Example 3: Set options to tune task processing behavior.
+	// Example 3: Set other options to tune task processing behavior.
 	// Options include MaxRetry, Queue, Timeout, Deadline, Unique etc.
 	// ----------------------------------------------------------------------------

-	c.SetDefaultOptions(tasks.ImageProcessing, asynq.MaxRetry(10), asynq.Timeout(time.Minute))
+	client.SetDefaultOptions(tasks.TypeImageResize, asynq.MaxRetry(10), asynq.Timeout(3*time.Minute))

-	t = tasks.NewImageProcessingTask("some/blobstore/url", "other/blobstore/url")
-	err = c.Enqueue(t)
+	task, err = tasks.NewImageResizeTask("https://example.com/myassets/image.jpg")
 	if err != nil {
-		log.Fatal("could not enqueue task: %v", err)
+		log.Fatalf("could not create task: %v", err)
 	}
+	info, err = client.Enqueue(task)
+	if err != nil {
+		log.Fatalf("could not enqueue task: %v", err)
+	}
+	log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)

 	// ---------------------------------------------------------------------------
 	// Example 4: Pass options to tune task processing behavior at enqueue time.
-	// Options passed at enqueue time override default ones, if any.
+	// Options passed at enqueue time override default ones.
 	// ---------------------------------------------------------------------------

-	t = tasks.NewImageProcessingTask("some/blobstore/url", "other/blobstore/url")
-	err = c.Enqueue(t, asynq.Queue("critical"), asynq.Timeout(30*time.Second))
+	info, err = client.Enqueue(task, asynq.Queue("critical"), asynq.Timeout(30*time.Second))
 	if err != nil {
-		log.Fatal("could not enqueue task: %v", err)
+		log.Fatalf("could not enqueue task: %v", err)
 	}
+	log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)
 }
 ```

-Next, create a worker server to process these tasks in the background.
-To start the background workers, use [`Server`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#Server) and provide your [`Handler`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#Handler) to process the tasks.
+Next, start a worker server to process these tasks in the background. To start the background workers, use [`Server`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#Server) and provide your [`Handler`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#Handler) to process the tasks.

-You can optionally use [`ServeMux`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#ServeMux) to create a handler, just as you would with [`"net/http"`](https://golang.org/pkg/net/http/) Handler.
+You can optionally use [`ServeMux`](https://pkg.go.dev/github.com/hibiken/asynq?tab=doc#ServeMux) to create a handler, just as you would with [`net/http`](https://golang.org/pkg/net/http/) Handler.

 ```go
 package main
````

````diff
@@ -218,9 +238,9 @@ import (
 const redisAddr = "127.0.0.1:6379"

 func main() {
-	r := asynq.RedisClientOpt{Addr: redisAddr}
-
-	srv := asynq.NewServer(r, asynq.Config{
+	srv := asynq.NewServer(
+		asynq.RedisClientOpt{Addr: redisAddr},
+		asynq.Config{
 			// Specify how many concurrent workers to use
 			Concurrency: 10,
 			// Optionally specify multiple queues with different priority.
````

````diff
@@ -230,12 +250,13 @@ func main() {
 				"low": 1,
 			},
 			// See the godoc for other configuration options
-	})
+		},
+	)

 	// mux maps a type to a handler
 	mux := asynq.NewServeMux()
-	mux.HandleFunc(tasks.EmailDelivery, tasks.HandleEmailDeliveryTask)
-	mux.Handle(tasks.ImageProcessing, tasks.NewImageProcessor())
+	mux.HandleFunc(tasks.TypeEmailDelivery, tasks.HandleEmailDeliveryTask)
+	mux.Handle(tasks.TypeImageResize, tasks.NewImageProcessor())
 	// ...register other handlers...

 	if err := srv.Run(mux); err != nil {
````
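The server config above gives each queue a weight; only the `"low": 1` entry is visible in this excerpt. One common way to honor such weights is to choose a queue with probability proportional to its weight. This is shown here as an assumption, not as asynq's actual scheduler, and the queue names and weights in `main` are hypothetical.

```go
package main

import (
	"fmt"
	"math/rand"
	"sort"
)

// weightedQueue pairs a queue name with its priority weight.
type weightedQueue struct {
	name   string
	weight int
}

// sortedQueues turns a name->weight map into a name-sorted slice so that
// selection does not depend on Go's randomized map iteration order.
func sortedQueues(m map[string]int) []weightedQueue {
	qs := make([]weightedQueue, 0, len(m))
	for name, w := range m {
		qs = append(qs, weightedQueue{name, w})
	}
	sort.Slice(qs, func(i, j int) bool { return qs[i].name < qs[j].name })
	return qs
}

// totalWeight sums all queue weights.
func totalWeight(qs []weightedQueue) int {
	sum := 0
	for _, q := range qs {
		sum += q.weight
	}
	return sum
}

// pick maps r in [0, totalWeight) onto a queue: each queue owns a slice of the
// range as wide as its weight, so it is chosen with probability weight/total.
func pick(qs []weightedQueue, r int) string {
	for _, q := range qs {
		if r < q.weight {
			return q.name
		}
		r -= q.weight
	}
	return "" // r was outside [0, totalWeight)
}

func main() {
	// Hypothetical weights for illustration.
	qs := sortedQueues(map[string]int{"critical": 6, "default": 3, "low": 1})
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		counts[pick(qs, rand.Intn(totalWeight(qs)))]++
	}
	fmt.Println(counts) // roughly 60% critical, 30% default, 10% low
}
```

Go randomizes map iteration order, so sorting the queues by name keeps `pick` deterministic for a given `r`. The only randomness left is the draw from `rand.Intn`.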

@@ -244,52 +265,52 @@ func main() {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

For a more detailed walk-through of the library, see our [Getting Started Guide](https://github.com/hibiken/asynq/wiki/Getting-Started).

|

For a more detailed walk-through of the library, see our [Getting Started](https://github.com/hibiken/asynq/wiki/Getting-Started) guide.

|

||||||

|

|

||||||

To Learn more about `asynq` features and APIs, see our [Wiki](https://github.com/hibiken/asynq/wiki) and [godoc](https://godoc.org/github.com/hibiken/asynq).

|

To learn more about `asynq` features and APIs, see the package [godoc](https://godoc.org/github.com/hibiken/asynq).

|

||||||

|

|

||||||

|

## Web UI

|

||||||

|

|

||||||

|

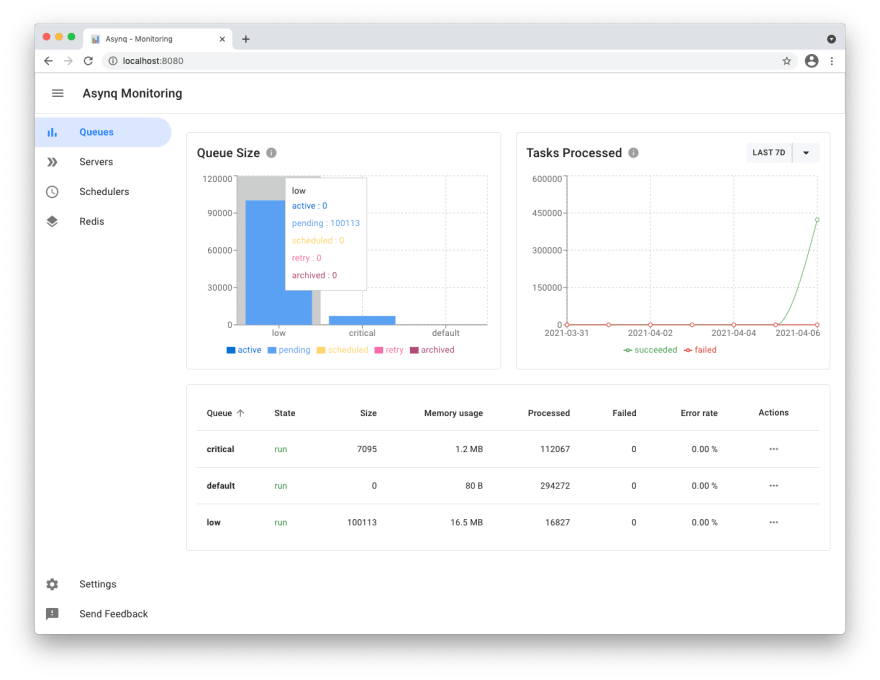

[Asynqmon](https://github.com/hibiken/asynqmon) is a web based tool for monitoring and administrating Asynq queues and tasks.

|

||||||

|

|

||||||

|

Here's a few screenshots of the Web UI:

|

||||||

|

|

||||||

|

**Queues view**

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

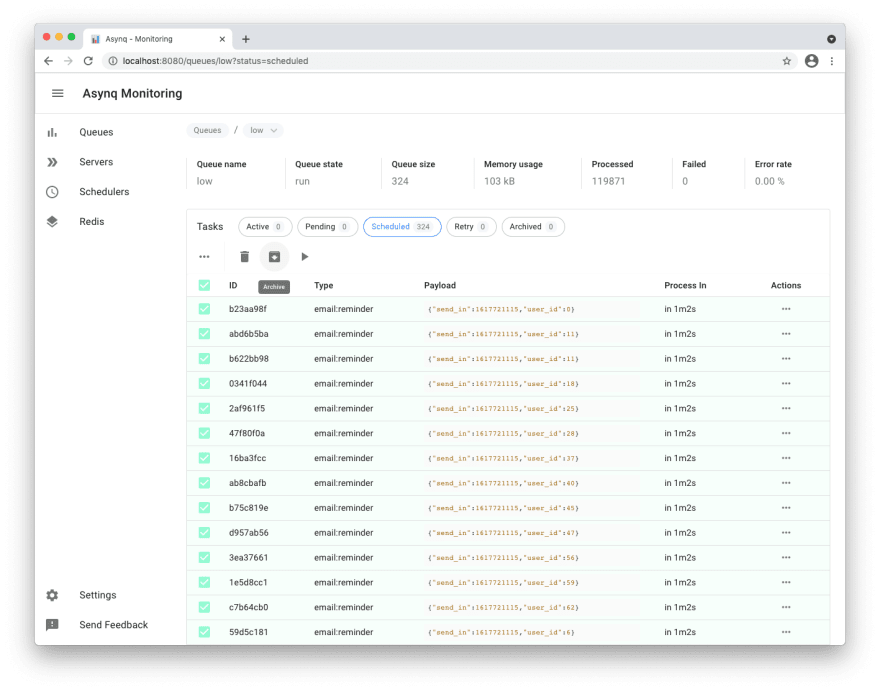

**Tasks view**

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

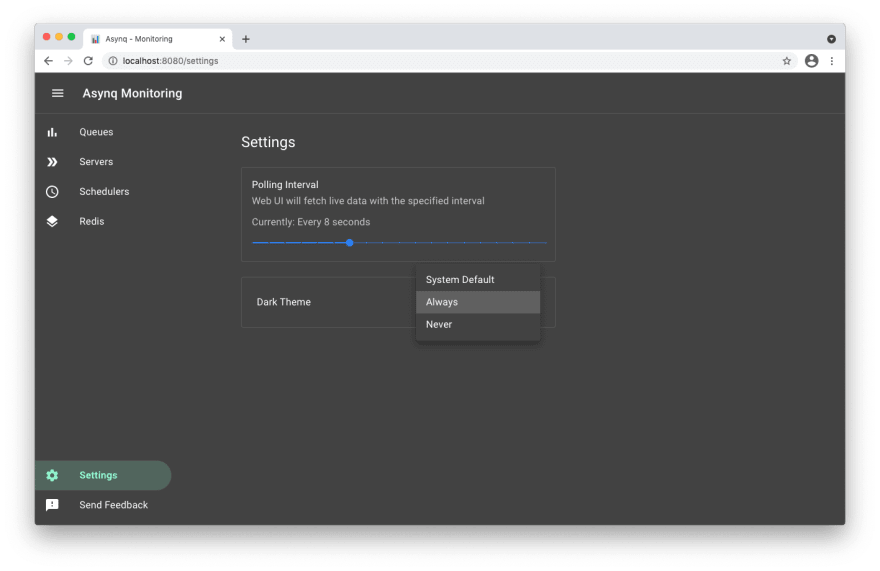

**Settings and adaptive dark mode**

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

For details on how to use the tool, refer to the tool's [README](https://github.com/hibiken/asynqmon#readme).

|

||||||

|

|

||||||

## Command Line Tool

|

## Command Line Tool

|

||||||

|

|

||||||

Asynq ships with a command line tool to inspect the state of queues and tasks.

|

Asynq ships with a command line tool to inspect the state of queues and tasks.

|

||||||

|

|

||||||

Here's an example of running the `stats` command.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

For details on how to use the tool, refer to the tool's [README](/tools/asynq/README.md).

|

|

||||||

|

|

||||||

## Installation

|

|

||||||

|

|

||||||

To install `asynq` library, run the following command:

|

|

||||||

|

|

||||||

```sh

|

|

||||||

go get -u github.com/hibiken/asynq

|

|

||||||

```

|

|

||||||

|

|

||||||

To install the CLI tool, run the following command:

|

To install the CLI tool, run the following command:

|

||||||

|

|

||||||

```sh

|

```sh

|

||||||

go get -u github.com/hibiken/asynq/tools/asynq

|

go get -u github.com/hibiken/asynq/tools/asynq

|

||||||

```

|

```

|

||||||

|

|

||||||

## Requirements

|

Here's an example of running the `asynq stats` command:

|

||||||

|

|

||||||

| Dependency | Version |

|

|

||||||

| -------------------------- | ------- |

|

|

||||||

| [Redis](https://redis.io/) | v2.8+ |

|

For details on how to use the tool, refer to the tool's [README](/tools/asynq/README.md).

|

||||||

| [Go](https://golang.org/) | v1.13+ |

|

|

||||||

|

|

||||||

## Contributing

|

## Contributing

|

||||||

|

|

||||||

We are open to, and grateful for, any contributions (Github issues/pull-requests, feedback on Gitter channel, etc) made by the community.

|

We are open to, and grateful for, any contributions (GitHub issues/PRs, feedback on [Gitter channel](https://gitter.im/go-asynq/community), etc) made by the community.

|

||||||

|

|

||||||

Please see the [Contribution Guide](/CONTRIBUTING.md) before contributing.

|

Please see the [Contribution Guide](/CONTRIBUTING.md) before contributing.

|

||||||

|

|

||||||

## Acknowledgements

|

|

||||||

|

|

||||||

- [Sidekiq](https://github.com/mperham/sidekiq) : Many of the design ideas are taken from sidekiq and its Web UI

|

|

||||||

- [RQ](https://github.com/rq/rq) : Client APIs are inspired by rq library.

|

|

||||||

- [Cobra](https://github.com/spf13/cobra) : Asynq CLI is built with cobra

|

|

||||||

|

|

||||||

## License

|

## License

|

||||||

|

|

||||||

Asynq is released under the MIT license. See [LICENSE](https://github.com/hibiken/asynq/blob/master/LICENSE).

|

Copyright (c) 2019-present [Ken Hibino](https://github.com/hibiken) and [Contributors](https://github.com/hibiken/asynq/graphs/contributors). `Asynq` is free and open-source software licensed under the [MIT License](https://github.com/hibiken/asynq/blob/master/LICENSE). Official logo was created by [Vic Shóstak](https://github.com/koddr) and distributed under [Creative Commons](https://creativecommons.org/publicdomain/zero/1.0/) license (CC0 1.0 Universal).

|

||||||

|

|||||||

340 asynq.go

````diff
@@ -10,35 +10,163 @@ import (
 	"net/url"
 	"strconv"
 	"strings"
+	"time"

 	"github.com/go-redis/redis/v7"
+	"github.com/hibiken/asynq/internal/base"
 )

 // Task represents a unit of work to be performed.
 type Task struct {
-	// Type indicates the type of task to be performed.
-	Type string
+	// typename indicates the type of task to be performed.
+	typename string

-	// Payload holds data needed to perform the task.
-	Payload Payload
+	// payload holds data needed to perform the task.
+	payload []byte
 }

+func (t *Task) Type() string { return t.typename }
+func (t *Task) Payload() []byte { return t.payload }
+
 // NewTask returns a new Task given a type name and payload data.
-//
-// The payload values must be serializable.
-func NewTask(typename string, payload map[string]interface{}) *Task {
+func NewTask(typename string, payload []byte) *Task {
 	return &Task{
-		Type: typename,
-		Payload: Payload{payload},
+		typename: typename,
+		payload: payload,
 	}
 }

+// A TaskInfo describes a task and its metadata.
+type TaskInfo struct {
+	// ID is the identifier of the task.
+	ID string
+
+	// Queue is the name of the queue in which the task belongs.
+	Queue string
+
+	// Type is the type name of the task.
+	Type string
+
+	// Payload is the payload data of the task.
+	Payload []byte
+
+	// State indicates the task state.
+	State TaskState
+
+	// MaxRetry is the maximum number of times the task can be retried.
+	MaxRetry int
+
+	// Retried is the number of times the task has retried so far.
+	Retried int
+
+	// LastErr is the error message from the last failure.
+	LastErr string
+
+	// LastFailedAt is the time time of the last failure if any.
+	// If the task has no failures, LastFailedAt is zero time (i.e. time.Time{}).
+	LastFailedAt time.Time
````